How Making an iOS Application Inspired Roboflow

Before the Roboflow team built tools to help developers apply computer vision to their problems, we built our own computer vision applications. One of those applications, Magic Sudoku, even won Product Hunt's Augmented Reality App of the Year. Another, BoardBoss, is available for free on the iPhone App Store.

In this post, we'll walk through the steps we took to go from raw images to a working mobile application that improves how you play Boggle!

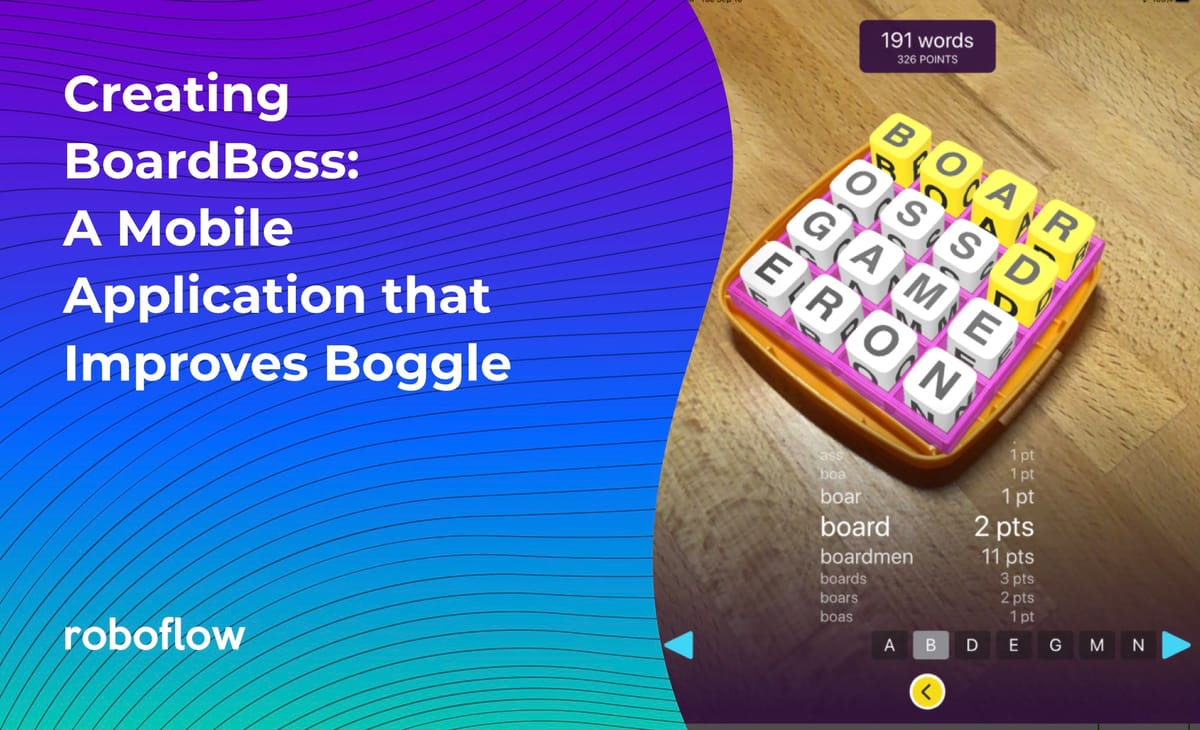

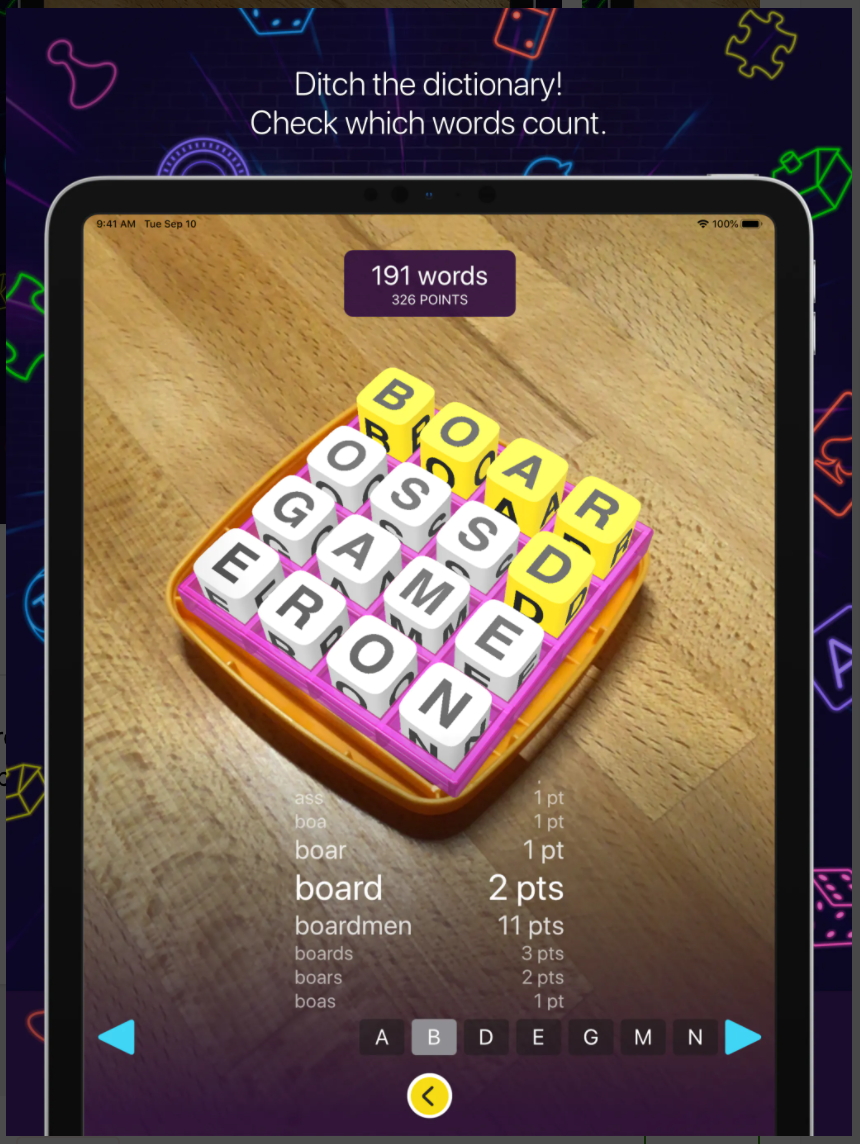

What is BoardBoss?

BoardBoss is an application that improves the classic word game Boggle. In Boggle, a random assortment of letters is presented in a grid. Players have one minute to find as many words as they can by chaining together adjacent letter tiles. When time runs out, words are scored based on their length, and whichever player has earned the most points wins!
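The post doesn't list point values. As a minimal sketch, assuming the standard published Boggle scoring table (words under three letters score nothing; 3 to 4 letters score 1 point; 5 letters, 2; 6 letters, 3; 7 letters, 5; 8 or more, 11), length-based tallying looks like this. The function names are our own, for illustration only.

```python
def score_word(word: str) -> int:
    """Points for one word, assuming the standard Boggle scoring table."""
    n = len(word)
    if n < 3:
        return 0  # too short to count
    if n <= 4:
        return 1
    if n == 5:
        return 2
    if n == 6:
        return 3
    if n == 7:
        return 5
    return 11  # eight letters or more


def score_words(words) -> int:
    """Total score for a player's words; duplicates only count once."""
    return sum(score_word(w) for w in set(words))


print(score_words(["cat", "tree", "stone", "boggle", "puzzles", "abandoned"]))  # prints 23
```

The table rewards long words steeply, which is exactly why the words nobody spots tend to be the valuable ones.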

There's one key problem with Boggle, however: at the end of the game, any words that no player found remain unknown!

This is where BoardBoss comes in. BoardBoss is a smartphone app that scans the board, identifies all possible words, and overlays those solutions in augmented reality on the user's phone.

Building BoardBoss

To build BoardBoss, we had to train a computer vision model that could identify letter tiles in any orientation. We then wrote an application that used the model's outputs to find every possible word and overlay those words on the board.
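The post doesn't describe how BoardBoss searches for words. One common approach, sketched here as an assumption rather than the app's actual code, is a depth-first search from every tile that steps to any of the eight neighbors, never reuses a tile, and prunes any path that isn't a prefix of some dictionary word. The tiny dictionary below is hypothetical; a real app would load a full word list.

```python
from typing import List, Set


def find_words(board: List[List[str]], dictionary: Set[str], min_len: int = 3) -> Set[str]:
    """Return every dictionary word that can be traced through adjacent tiles."""
    rows, cols = len(board), len(board[0])
    words = {w.lower() for w in dictionary}
    # Every prefix of every word, so dead-end paths can be abandoned early.
    prefixes = {w[:i] for w in words for i in range(1, len(w) + 1)}
    found: Set[str] = set()

    def dfs(r: int, c: int, path: str, visited: set) -> None:
        word = path + board[r][c].lower()  # tiles like "Qu" add two letters
        if word not in prefixes:
            return
        if len(word) >= min_len and word in words:
            found.add(word)
        visited.add((r, c))
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in visited:
                    dfs(nr, nc, word, visited)
        visited.remove((r, c))  # backtrack so other paths may use this tile

    for r in range(rows):
        for c in range(cols):
            dfs(r, c, "", set())
    return found


board = [["c", "a"],
         ["t", "s"]]
words = {"cat", "cats", "act", "acts", "scat", "tacs", "dog"}
print(sorted(find_words(board, words)))  # prints ['act', 'acts', 'cat', 'cats', 'scat', 'tacs']
```

Prefix pruning is what keeps this fast: on a 4x4 board the number of raw paths explodes, but almost all of them stop being a valid prefix after two or three letters.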

This post will focus on building the computer vision model: data collection, labeling, preprocessing, augmentation, model training, and model export.

Data Collection

Boggle boards are typically a 4x4 grid of letter tiles, but they also come in 5x5 and even 6x6 versions. Moreover, a tile can show any letter of the alphabet at any orientation relative to the camera. Some variations of Boggle also include special tiles, like wild cards or blockers. All told, there are over 30 possible classes!

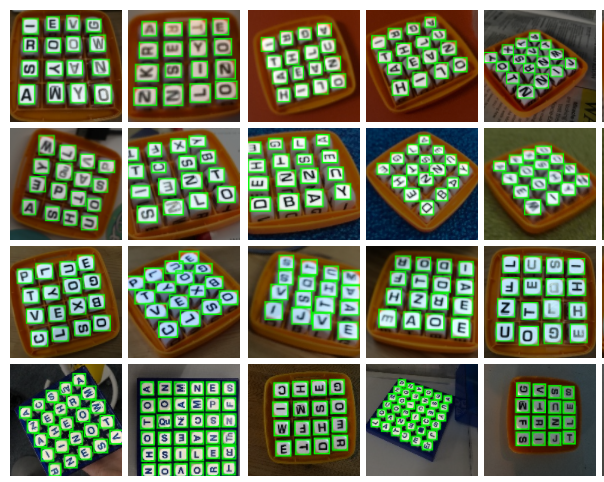

The Roboflow team collected 355 images, predominantly of 4x4 boards. Critically, because we were building an app meant to work on everything from the iPhone 7 to the iPhone 11, we needed to collect images from each device to account for the varying photo quality our users' phones would capture. We also photographed Boggle boards in different conditions, varying the tabletop surface and the lighting.

A sampling of our collected images, deliberately including some blur and various angles.

Data Labeling

Next, we needed to label our images. Specifically, we needed to label the individual letter tiles on each board: a bit of a painstaking task!

We're partial to Roboflow Annotate. You can create an account for free, label and annotate data for your computer vision projects, and use datasets from Roboflow Universe.

When labeling, we made sure to label the tops of the cubes, fully enclose each letter in its bounding box, and do our best to label even blurred examples. We knew our users wouldn't necessarily have the Boggle board perfectly in focus or even in the center of the frame, so it was important for our training data to reflect that.

The Roboflow team regularly struggled to keep track of who had labeled what, whether we had sufficient data from each iPhone model we wanted to support, and whether we were making typos in our labels (spoiler: we were). These are some of the lessons we baked into Roboflow: seamless dataset management across teams, with automated annotation checks.
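The post doesn't say which checks Roboflow runs. As a hypothetical illustration of the kind of check that would have caught our typos, this standard-library sketch counts each label and flags any label outside an allowed class list, suggesting the closest valid class with `difflib.get_close_matches`. The class names and the `(image, label)` input shape are assumptions.

```python
import difflib
from collections import Counter


def suggest_class(label, allowed):
    """Guess which allowed class a mistyped label was meant to be, or None."""
    lookup = {c.upper(): c for c in allowed}
    normalized = label.strip().upper()  # catches case and whitespace slips
    if normalized in lookup:
        return lookup[normalized]
    close = difflib.get_close_matches(normalized, list(lookup), n=1, cutoff=0.5)
    return lookup[close[0]] if close else None


def audit_labels(annotations, allowed):
    """annotations: iterable of (image_name, label) pairs.

    Returns per-label counts and a list of (image, bad_label, suggestion).
    """
    counts = Counter()
    problems = []
    for image, label in annotations:
        counts[label] += 1
        if label not in allowed:
            problems.append((image, label, suggest_class(label, allowed)))
    return counts, problems


annotations = [("img1.jpg", "A"), ("img1.jpg", "a "), ("img2.jpg", "B"), ("img2.jpg", "?")]
counts, problems = audit_labels(annotations, {"A", "B", "C"})
print(problems)  # prints [('img1.jpg', 'a ', 'A'), ('img2.jpg', '?', None)]
```

The same `Counter` pattern, keyed by device model instead of label, would answer the "do we have enough iPhone 7 photos?" question.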

All told, we added 7,110 annotations to our images!

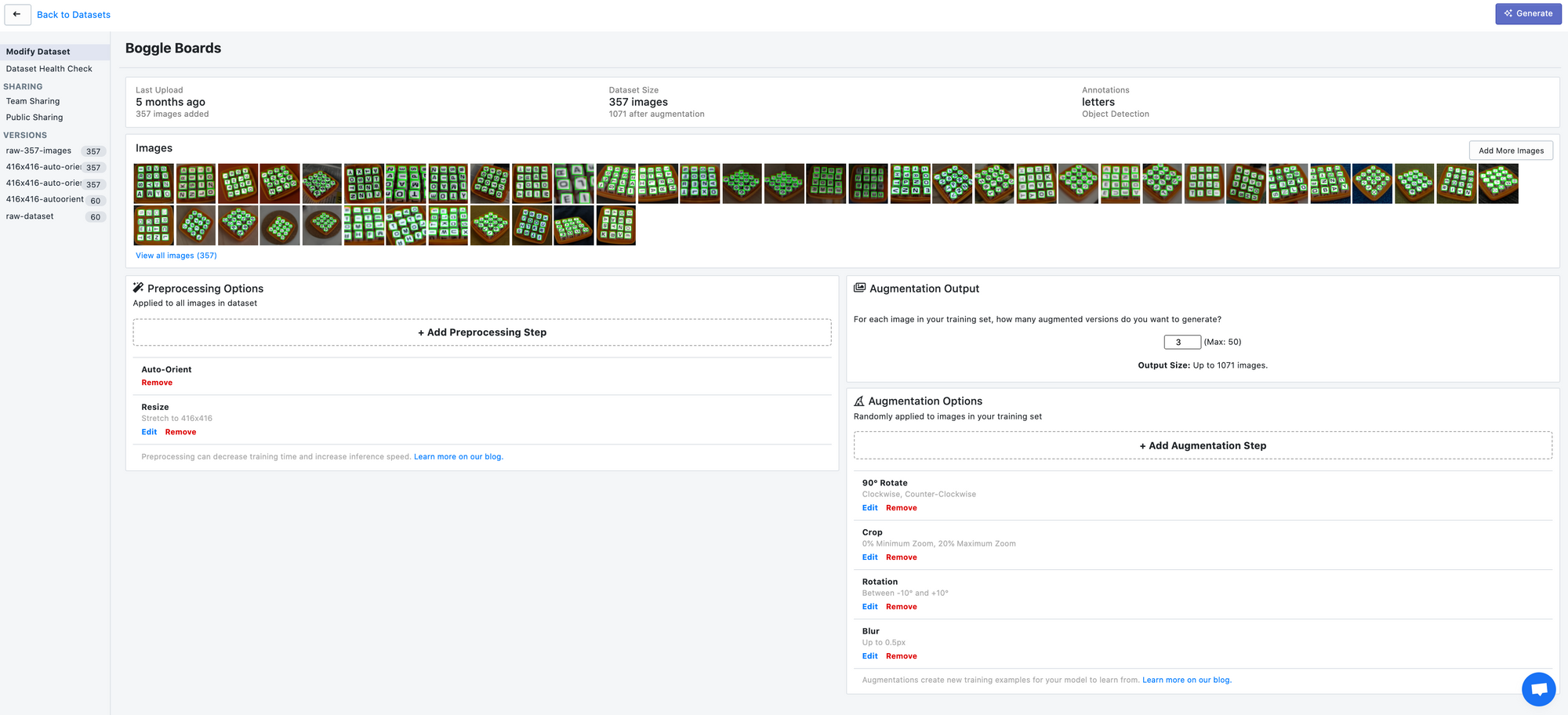

Data Preprocessing and Augmentation

Once we had labeled data, we still needed to turn our labeled images into something our model could use. What's more, we sought to add deliberate variation to our dataset to account for the varied conditions our users would encounter.

So we wrote a bunch of Python scripts to resize our images into squares, handle EXIF data and auto-orientation, adjust contrast, and experiment with grayscale as a preprocessing step.
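Resizing to squares means every bounding box must be rescaled too, or the labels drift off their letters. This sketch assumes images are stretched (not padded) to a square, and the 416-pixel default is an arbitrary example rather than the size we used. The pixel resize itself would come from an image library such as Pillow, whose `ImageOps.exif_transpose` can apply the EXIF orientation first; only the box bookkeeping is shown.

```python
def resize_box_to_square(box, orig_size, target=416):
    """Scale a (xmin, ymin, xmax, ymax) pixel box when an image of
    orig_size=(width, height) is stretched to target x target pixels."""
    width, height = orig_size
    xmin, ymin, xmax, ymax = box
    # x and y scale independently because stretching changes the aspect ratio.
    return (
        round(xmin * target / width),
        round(ymin * target / height),
        round(xmax * target / width),
        round(ymax * target / height),
    )


print(resize_box_to_square((100, 50, 300, 150), (800, 600), target=400))  # prints (50, 33, 150, 100)
```

Stretching distorts letter shapes slightly; padding (letterboxing) avoids that at the cost of wasted pixels, which is one of the trade-offs worth comparing across dataset versions.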

We also experimented with different augmentation techniques to increase variation in our dataset, such as randomly rotating our images, randomly cropping them, adding image noise, and more.
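Random rotation matters here because letters can face any direction, and the labels must rotate with the image. A hedged sketch, assuming rotations restricted to multiples of 90 degrees (arbitrary angles would require re-fitting each box), with only the box math shown; the pixel rotation would be done by an image library.

```python
import random


def rotate_box_90cw(box, size):
    """Rotate a (xmin, ymin, xmax, ymax) box 90 degrees clockwise inside an
    image of size=(width, height). Returns (new_box, new_size)."""
    width, height = size
    xmin, ymin, xmax, ymax = box
    # A point (x, y) moves to (height - y, x); the new image is height x width.
    return (height - ymax, xmin, height - ymin, xmax), (height, width)


def random_rot90(boxes, size, rng=random):
    """Apply the same random multiple of 90 degrees to every box on one image."""
    turns = rng.randrange(4)
    for _turn in range(turns):
        boxes = [rotate_box_90cw(b, size)[0] for b in boxes]
        size = (size[1], size[0])  # width and height swap on each quarter turn
    return turns, boxes, size


print(rotate_box_90cw((10, 5, 30, 15), (100, 50)))  # prints ((35, 10, 45, 30), (50, 100))
```

Four quarter turns bring every box back to where it started, which makes a handy sanity check for this kind of coordinate math.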

We diligently tracked which dataset versions performed best so we could choose the augmentations that would yield the best-performing application. (This did lead to a sprawling set of boggle_dataset_version_2_final_for_real type datasets!)

Now, anyone can try dozens of preprocessing and augmentation techniques on their versioned datasets in Roboflow with a single click.

Model Training and Export

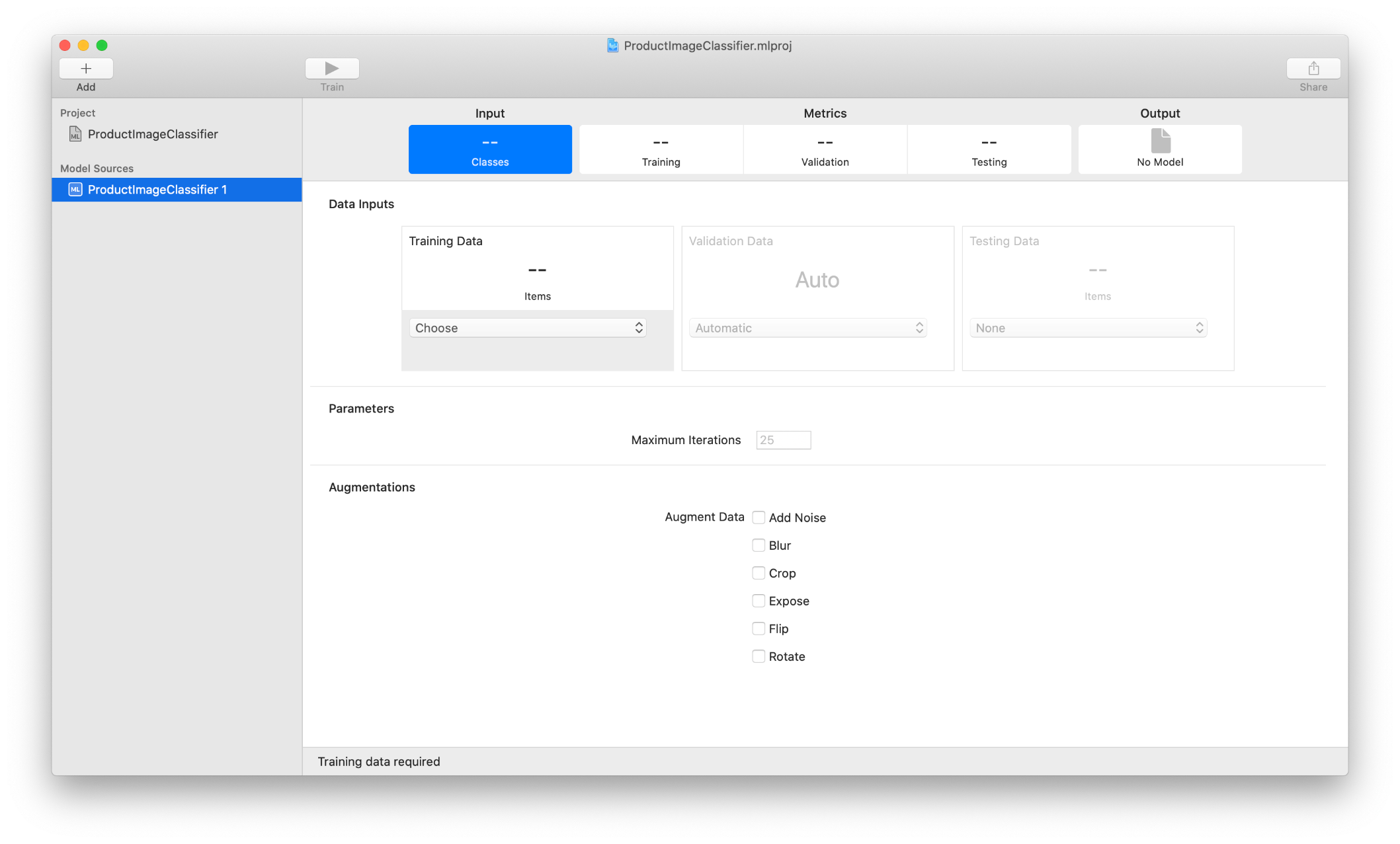

To create a mobile-friendly application, we initially leaned heavily on CreateML for model training. CreateML is an application that ships with Xcode for training iPhone-ready machine learning models through a graphical user interface. Users can import their data, train, and get back a .mlmodel file to drop into their application.

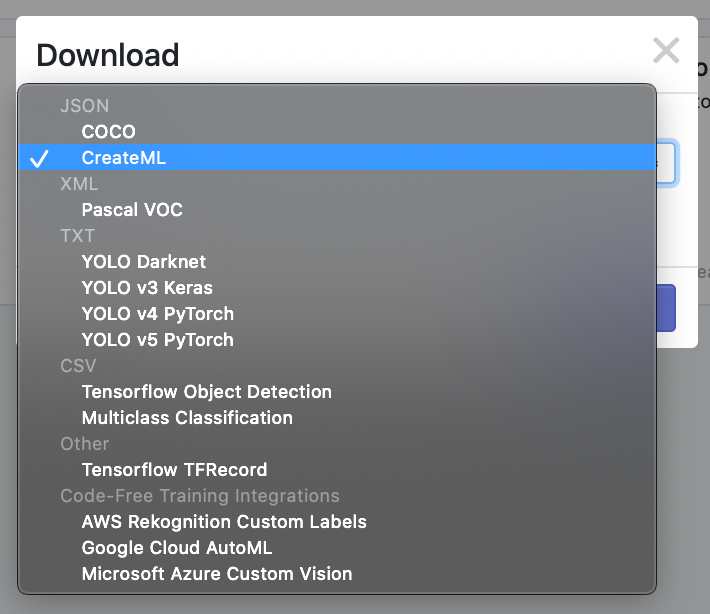

However, getting our data from the annotation format we labeled in (VOC XML) into CreateML's JSON format wasn't easy, so we wrote a script to convert VOC XML to CreateML JSON. Again, this felt like a distraction from our core task: if our goal was to build a mobile application that improves Boggle, why were we spending time writing file conversion tooling? It felt like an author converting a PDF to a Word doc instead of writing.
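Our original script isn't reproduced here. A minimal reconstruction, assuming standard Pascal VOC files (`filename`, `object/name`, `object/bndbox`) and CreateML's object-detection JSON layout, which describes each box by its center `x`, `y` plus `width` and `height` in pixels. The main work is converting corner coordinates to center coordinates.

```python
import json
import xml.etree.ElementTree as ET


def voc_to_createml(xml_text):
    """Convert one Pascal VOC XML annotation into a CreateML image entry."""
    root = ET.fromstring(xml_text)
    annotations = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(bb.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        annotations.append({
            "label": obj.findtext("name"),
            # CreateML expects the box center plus width and height, in pixels.
            "coordinates": {
                "x": (xmin + xmax) / 2,
                "y": (ymin + ymax) / 2,
                "width": xmax - xmin,
                "height": ymax - ymin,
            },
        })
    return {"image": root.findtext("filename"), "annotations": annotations}


def voc_files_to_createml_json(xml_texts):
    """CreateML reads one JSON array describing the whole dataset."""
    return json.dumps([voc_to_createml(t) for t in xml_texts], indent=2)


sample = """<annotation><filename>board1.jpg</filename>
<object><name>A</name><bndbox><xmin>10</xmin><ymin>20</ymin><xmax>50</xmax><ymax>80</ymax></bndbox></object>
</annotation>"""
print(voc_files_to_createml_json([sample]))
```

In practice you would read each `.xml` file from disk and write the resulting string to a single `annotations.json` next to the images.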

This is yet another feature we baked into Roboflow: import data in any format (COCO JSON, TensorFlow CSV, YOLO .txt, and more) and export it to CreateML JSON or any other format.

After leaving our laptop training for a weekend, we had a CreateML model we deemed good enough (94% accuracy) to ship an initial application.
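The post doesn't say exactly how the 94% figure was computed. As background only, object detectors are commonly evaluated by matching each predicted box to a labeled box using intersection over union (IoU) and counting a match as correct above a threshold such as 0.5. A minimal IoU function, not CreateML's own metric code:

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # zero if the boxes don't overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # half-overlapping squares: 50 / 150
```

For small, tightly packed Boggle tiles, a box shifted by half a tile already drops to an IoU of about 0.33, so a 0.5 threshold demands fairly precise localization.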

Launching BoardBoss

Once we had a working model, we worked hard on interpreting the model's outputs and overlaying the results in augmented reality. It was time to launch!
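Interpreting the outputs means turning a loose list of letter detections back into a board. One simple approach, sketched as an assumption rather than BoardBoss's actual logic: infer the board size from the detection count (16, 25, or 36 tiles for 4x4, 5x5, or 6x6), sort detections top to bottom into rows, then sort each row left to right. The `(label, x_center, y_center)` tuple shape is hypothetical, and this only works when the board is roughly aligned with the camera; a tilted board would first need a perspective correction.

```python
import math


def detections_to_grid(detections):
    """Arrange (label, x_center, y_center) detections into a square grid of labels."""
    n = math.isqrt(len(detections))
    if n * n != len(detections):
        raise ValueError(f"expected a square board, got {len(detections)} tiles")
    by_y = sorted(detections, key=lambda d: d[2])  # top to bottom
    grid = []
    for i in range(n):
        row = sorted(by_y[i * n:(i + 1) * n], key=lambda d: d[1])  # left to right
        grid.append([label for label, _x, _y in row])
    return grid


detections = [("D", 52, 48), ("A", 10, 10), ("C", 12, 50), ("B", 50, 12)]
print(detections_to_grid(detections))  # prints [['A', 'B'], ['C', 'D']]
```

A grid in this shape is what a word search over adjacent tiles would consume, and keeping each detection's coordinates alongside its label is what lets the results be drawn back onto the board in AR.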

We shared BoardBoss on Product Hunt, where it earned 150 upvotes and a few hundred downloads over the next couple of weeks.

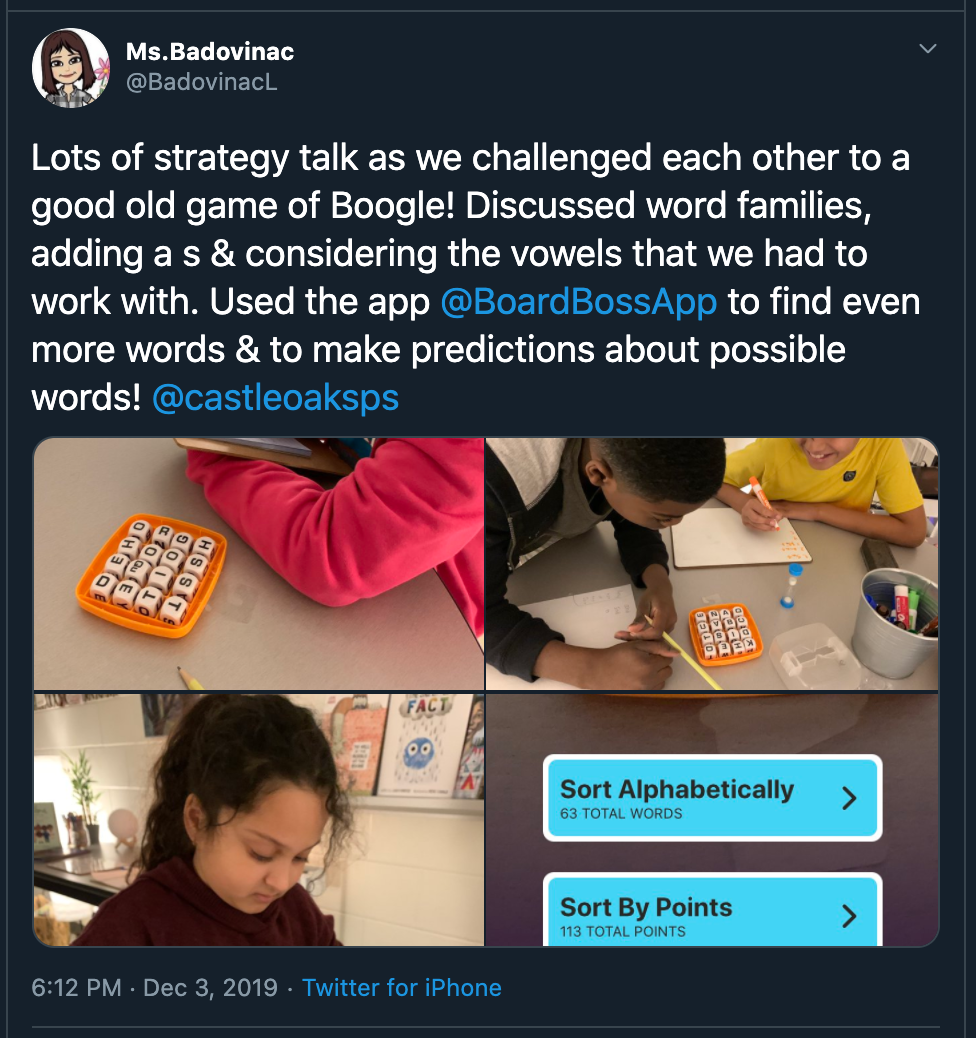

While BoardBoss didn't catch fire the way Magic Sudoku did (it reached the front page of Reddit and Imgur), we did receive exciting feedback we didn't anticipate. For example, a class in Canada incorporated BoardBoss into its elementary school lessons to help students build vocabulary.

Build Your Own BoardBoss

We're inspired by the limitless potential for computer vision in all parts of life, from board games to zoo trips and everything in between.

To aid your own curiosity and exploration, we've open-sourced all of the labeled data required to build your own BoardBoss on Roboflow Public Datasets.

The steps we took here are easily reproducible with free tools: collecting data, labeling with Roboflow, handling computer vision data in Roboflow, and training in CreateML.

We've also developed an iOS CashCounter application using the new Roboflow Mobile iOS SDK. Deploying to iOS is now straightforward, and we've outlined the steps in the Roboflow GitHub and our iOS deployment feature release.

In sharing our story, we hope to inspire future applications as well as provide insights into what inspired us to make Roboflow.

We cannot wait to see what you build!

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Jun 25, 2020). Creating BoardBoss: A Mobile Application that Improves Boggle. Roboflow Blog: https://blog.roboflow.com/creating-boardboss-a-mobile-application-that-improves-boggle/