Drones are enabling better disaster response, greener agriculture, safer construction, and so much more. Increasingly, drones can perform these tasks fully or semi-autonomously, enabling greater precision and efficiency.

So when Victor Antony, a data scientist from the University of Rochester, was tasked with creating the software to let a drone fly autonomously, he knew it would be an exciting challenge.

How to Train Your Drone

Victor set out to build an object detection model that would reliably identify the gates a drone needed to fly through. In this controlled environment, one team member was responsible for building a successful computer vision model for drone navigation, while other team members focused on using the detected gate locations to adjust the drone's position.

Victor started with 300 captured images of gates in a warehouse environment.

After having his images labeled, Victor identified key gaps in his data collection and training pipeline. Namely, all images were captured from the same orientation, whereas the drone's autopilot could approach a gate at any rotation – not to mention under different lighting conditions, from various distances (altering perspective), and with blur.

Moreover, his labeled images were not yet in a format that every training framework could easily use. PyTorch implementations predominantly call for COCO JSON, YOLO Darknet requires Darknet .txt files, and TensorFlow requires TFRecord files. Given the variety of open source implementations across these frameworks, setting up a separate training pipeline for each one just to test baseline model performance would distract from the core domain problem: teaching a drone to identify gates.
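Converting between these formats is mechanical but fiddly. As an illustration of the kind of conversion script involved, here is a minimal sketch (not Victor's code or Roboflow's) that turns a COCO JSON annotation dict, already loaded into memory, into Darknet .txt lines. COCO stores each box as `[x_min, y_min, width, height]` in pixels, while Darknet expects `class_id x_center y_center width height` normalized to the image size. The file name `gate_001.jpg` and the box values are hypothetical.

```python
from collections import defaultdict


def coco_to_darknet(coco: dict) -> dict[str, list[str]]:
    """Convert a COCO-style annotation dict into Darknet label lines per image.

    COCO bbox: [x_min, y_min, width, height] in absolute pixels.
    Darknet line: "class_id x_center y_center width height", all in [0, 1].
    """
    images = {img["id"]: img for img in coco["images"]}
    # Darknet class ids are zero-based indices; COCO category ids are arbitrary.
    cat_ids = sorted(cat["id"] for cat in coco["categories"])
    cat_index = {cat_id: i for i, cat_id in enumerate(cat_ids)}

    lines: dict[str, list[str]] = defaultdict(list)
    for ann in coco["annotations"]:
        img = images[ann["image_id"]]
        img_w, img_h = img["width"], img["height"]
        x, y, w, h = ann["bbox"]
        # Move from the top-left corner to the box center, then normalize.
        xc = (x + w / 2) / img_w
        yc = (y + h / 2) / img_h
        lines[img["file_name"]].append(
            f"{cat_index[ann['category_id']]} "
            f"{xc:.6f} {yc:.6f} {w / img_w:.6f} {h / img_h:.6f}"
        )
    return dict(lines)


# Hypothetical single-gate example: a 640x480 image with one labeled gate.
sample = {
    "images": [{"id": 1, "file_name": "gate_001.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "gate"}],
    "annotations": [{"id": 1, "image_id": 1, "category_id": 1, "bbox": [100, 120, 200, 160]}],
}
print(coco_to_darknet(sample))
# {'gate_001.jpg': ['0 0.312500 0.416667 0.312500 0.333333']}
```

Each list would then be written to a .txt file named after its image. TFRecord export involves a similar remapping, plus serialization into `tf.train.Example` records.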

“I wasn’t sure which framework I was going to use, so I was able to experiment with multiple ones in Roboflow.”

Victor used Roboflow to resolve both of these issues.

First, he leveraged image augmentation to transform his 300 images into a dataset of 900 diverse examples, including various rotations, lighting conditions, and blurring. Roboflow preprocessing also ensured all images were resized consistently.
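To show what such a pipeline does, here is a minimal NumPy sketch. It is not Roboflow's implementation. Each source image is kept and joined by two augmented copies, so 300 images become 900, and every output is resized to one shape. The sketch assumes boxes are `(x_min, y_min, x_max, y_max)` pixel tuples and images are HxWxC `uint8` arrays. It uses only 90-degree rotations so the boxes stay exact. The brightness range, blur kernel, and output size are hypothetical choices.

```python
import numpy as np

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def rotate90(img: np.ndarray, boxes: list[Box]) -> tuple[np.ndarray, list[Box]]:
    """Rotate an HxWxC image 90 degrees counterclockwise, moving its boxes too."""
    w = img.shape[1]
    # A point (x, y) lands at (y, w - x) after a counterclockwise quarter turn.
    new_boxes = [(y0, w - x1, y1, w - x0) for (x0, y0, x1, y1) in boxes]
    return np.rot90(img).copy(), new_boxes


def adjust_brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale pixel intensities; factor < 1 darkens, > 1 brightens."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Average each pixel with its k x k neighborhood (edges are replicated)."""
    pad = k // 2
    padded = np.pad(img.astype(np.float32), ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=np.float32)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return np.round(out / (k * k)).astype(np.uint8)


def resize(img: np.ndarray, boxes: list[Box], size: tuple[int, int]) -> tuple[np.ndarray, list[Box]]:
    """Nearest-neighbor resize to (height, width), scaling boxes to match."""
    h, w = img.shape[:2]
    out_h, out_w = size
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    sx, sy = out_w / w, out_h / h
    new_boxes = [(x0 * sx, y0 * sy, x1 * sx, y1 * sy) for (x0, y0, x1, y1) in boxes]
    return img[rows][:, cols], new_boxes


def augment_dataset(samples, copies=2, size=(416, 416), seed=0):
    """Keep each (image, boxes) pair and add `copies` randomly augmented versions."""
    rng = np.random.default_rng(seed)
    out = []
    for img, boxes in samples:
        out.append(resize(img, boxes, size))  # the original, resized
        for _ in range(copies):
            aug_img, aug_boxes = img, list(boxes)
            for _ in range(rng.integers(0, 4)):  # 0 to 3 quarter turns
                aug_img, aug_boxes = rotate90(aug_img, aug_boxes)
            aug_img = adjust_brightness(aug_img, rng.uniform(0.6, 1.4))
            if rng.random() < 0.5:
                aug_img = box_blur(aug_img)
            out.append(resize(aug_img, aug_boxes, size))
    return out


# Synthetic stand-in for 300 captured gate images (small, to keep memory low).
rng = np.random.default_rng(1)
samples = [
    (rng.integers(0, 256, size=(48, 64, 3)).astype(np.uint8), [(10.0, 12.0, 30.0, 28.0)])
    for _ in range(300)
]
dataset = augment_dataset(samples, size=(32, 32))
print(len(dataset))  # 900
```

Arbitrary-angle rotation would also be possible, but the rotated box then has to be re-fit around the rotated corners, which loosens it. That is one reason the sketch sticks to quarter turns.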

Second, using Roboflow's one-click annotation format exports, Victor was able to seamlessly experiment with PyTorch and TensorFlow models. He could spend his time evaluating model performance in his domain and the tradeoffs in real-time output, rather than writing conversion scripts.

The Results

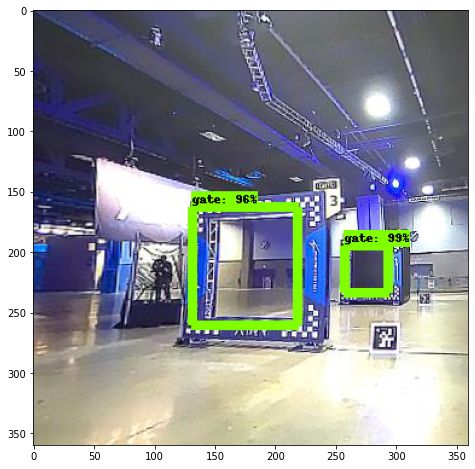

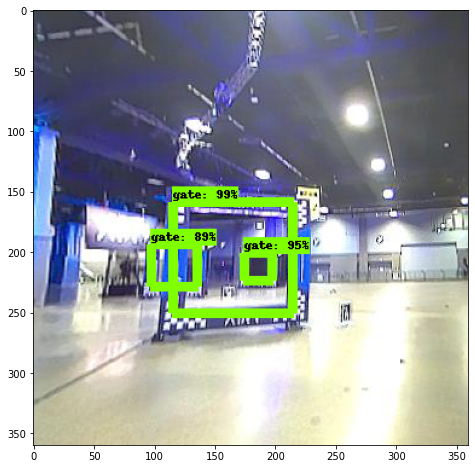

Victor found TensorFlow's MobileNetSSD, available in the Roboflow Model Library, to be the most performant.

In fact, the model was so performant that it found 100 percent of the gates in Victor's holdout test set. "I was astonished with the seamless performance – I wasn't sure it was correct given the performance."
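"Found 100 percent of gates" describes recall: the share of labeled gates that the model detected. The post does not say how a detection was counted as a match. The sketch below assumes a common convention: a predicted box matches a ground-truth gate when their intersection-over-union (IoU) is at least 0.5, and each prediction can match at most one gate. The `recall` helper and the sample boxes are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall(ground_truth: list[list[Box]], predictions: list[list[Box]],
           threshold: float = 0.5) -> float:
    """Fraction of ground-truth gates matched by a prediction with IoU >= threshold.

    Matching is greedy per image: the highest-IoU pairs are taken first, and
    each gate and each prediction is used at most once.
    """
    found = total = 0
    for gts, preds in zip(ground_truth, predictions):
        total += len(gts)
        pairs = sorted(
            ((iou(g, p), gi, pi) for gi, g in enumerate(gts) for pi, p in enumerate(preds)),
            reverse=True,
        )
        used_gt, used_pred = set(), set()
        for score, gi, pi in pairs:
            if score < threshold:
                break
            if gi in used_gt or pi in used_pred:
                continue
            used_gt.add(gi)
            used_pred.add(pi)
        found += len(used_gt)
    return found / total if total else 0.0


# Two hypothetical test images: three labeled gates, one of which is missed.
gt = [[(10, 10, 50, 50)], [(0, 0, 20, 20), (30, 30, 60, 60)]]
preds = [[(12, 12, 50, 50)], [(0, 0, 20, 20), (100, 100, 120, 120)]]
print(recall(gt, preds))  # 0.6666666666666666
print(recall(gt, gt))     # 1.0
```

Recall alone ignores false positives. In the example, the stray box at `(100, 100, 120, 120)` does not lower recall, so precision (or mAP) is worth reporting alongside it.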

Example outputs from Victor's MobileNetSSDv2 model trained to identify gates. (Credit: Victor Antony)

Victor Antony's results aren't a one-off, and his process is repeatable. If you're wrestling with setting up your computer vision processing pipeline, try Roboflow for free today.

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Jun 3, 2020). Teaching a Drone to Fly on Auto Pilot with Roboflow. Roboflow Blog: https://blog.roboflow.com/drone-computer-vision-autopilot/