In this guide, we explore how we can fine-tune a fully open-source, small vision language model, Moondream2, using a computer vision dataset to count items, a task at which GPT-4V has been inconsistent, and do it in a way so we can rely on the output for use in a production application.

Vision language models (VLMs), sometimes referred to as multimodal models, have grown in popularity. With the advent of technologies like CLIP, GPT-4 with Vision, and other advancements, the ability to query questions from visual inputs has become more accessible than ever before.

VLMs are a new frontier in machine learning and their performance is continually improving as new breakthroughs occur. As we have found with GPT-4 with Vision and more recently with GPT-4o, there are some tasks, like counting, where VLMs struggle. While understandable, since excelling at every single task is difficult given the constraints of training costs and inference speeds, the lack of expert-ability makes it difficult to use and rely on VLMs for production use cases.

While some multimodal models are better than others, many experience issues with outputting a consistent, parsable format. This creates a challenge for incorporating VLMs into applications and systems.

What is Moondream2

Moondream2 is an open-source small vision language model with source code on GitHub, made by “vikhyatk”. Although not a state-of-the-art model, its ability to run locally on-device with reasonable speed and accuracy makes it a compelling option as a VLM, and worth experimenting with to fine-tune to see if it works for your use case. For comparison with other VLMs, it scores relatively high. It even beats the recently released GPT-4o on VQAv2, which is impressive considering the local, open-source and much smaller size model of Moondream2.

Compared to another recently released multimodal open VLM by Google called PaliGemma, this model is a much smaller 1.86 billion, compared to the 8 billion of PaliGemma. GPT-4o and Gemini 1.5 Pro are suspected to be significantly larger than these two, but their exact sizes are unknown.

Moondream2 Open-Source Licensing

Unlike some “open” models, including PaliGemma, which have been scrutinized for restrictive terms, Moondream2 is open-source under the Apache 2.0 license, allowing commercial use as well.

How to Fine-tune Moondream2

For this guide, we will modify a version of the fine-tuning notebook provided by the creator, and improve Moondream2’s performance when used to count different types of US currency.

To get started, install packages that we will need throughout the process.\

!pip install torch transformers timm einops datasets bitsandbytes accelerate roboflow supervision -q

Collect Data for Fine-tuning Moondream2

One of the challenges that exist with creating any type of machine learning model is getting quality training data.

Since we want to fine-tune Moondream2 to count coins and bills, we will use this dataset from Roboflow Universe. It’s also possible to build and use your own Roboflow project to do this.

While this is an object detection dataset, we will show how it is possible to use it to finetune a VLM.

First, download the dataset from Universe:

from roboflow import Roboflow

from google.colab import userdata

rf = Roboflow(api_key=userdata.get('ROBOFLOW_API_KEY'))

project = rf.workspace("alex-hyams-cosqx").project("cash-counter")

version = project.version(8)

dataset = version.download("coco")Then, we create a helper class to use during fine-tuning. We use Supervision to import the dataset from the COCO format we downloaded it in.

from torch.utils.data import Dataset

import json

from PIL import Image

import supervision as sv

class RoboflowDataset(Dataset):

def __init__(self, dataset_path, split='train'):

self.split = split

sv_dataset = sv.DetectionDataset.from_coco(

f"{dataset_path}/{split}/",

f"{dataset_path}/{split}/_annotations.coco.json"

)

self.dataset = sv_dataset

def __len__(self):

return len(self.dataset)

# ... other methods listed below (full code in Colab notebook)

Then, we get to the important step of defining our dataset. In this implementation of finetuning, the dataset is read from an object, where image is the dataset image, the array qa contains an object with a question and answer, which will define the prompt/response pair that we would like to fine-tune toward.

def __getitem__(self, idx):

CLASSES = ["dime", "nickel", "penny", "quarter", "fifty", "five", "hundred", "one", "ten", "twenty"]

# Retrieve the image/annotation info from the Supervision DetectionDataset

image_name, annotations = list(self.dataset.annotations.items())[idx]

image = self.dataset.images[image_name]

# Finds the amount of each type of currency there is from the number of annotations there are

money = {}

for class_idx, money_type in enumerate(CLASSES):

count = len(annotations[annotations.class_id == (class_idx+1)]) # Counts the number of annotations with that class

if count == 0: continue;

money[money_type] = count

# Define the prompt/answer

prompt = f"How many of each type of the currency ({', '.join(CLASSES)}) are there? Respond in JSON format with the currency type as the key and a integer count as the value."

answer = json.dumps(money, indent=2) # Formats the JSON and makes it the answer

# Return as the proper format

return {

"image": Image.fromarray(image),

"qa": [

{

"question": prompt,

"answer": answer,

}

]

}The following code retrieves the data and creates the dataset classes for each split of our data.

datasets = {

"train": RoboflowDataset(dataset.location,"train"),

"val": RoboflowDataset(dataset.location,"valid"),

"test": RoboflowDataset(dataset.location,"test"),

}Initial Testing of Moondream2

Now that we have our dataset, we can begin testing how it performs with no fine-tuning. We can initialize Moondream2 by running the following:

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

DEVICE = "cuda"

FLASHATTENTION = "flash_attention_2" # "flash_attention_2" if A100, RTX 3090, RTX 4090, H100, None if CPU

DTYPE = torch.float32 if DEVICE == "cpu" else torch.float16 # CPU doesn't support float16

MD_REVISION = "2024-04-02"

tokenizer = AutoTokenizer.from_pretrained("vikhyatk/moondream2", revision=MD_REVISION)

moondream = AutoModelForCausalLM.from_pretrained(

"vikhyatk/moondream2", revision=MD_REVISION, trust_remote_code=True,

attn_implementation=FLASHATTENTION,

torch_dtype=DTYPE, device_map={"": DEVICE}

)

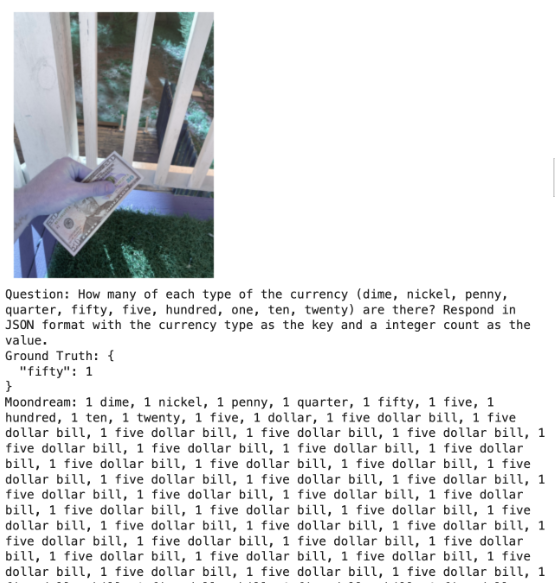

Then, we pass in a picture of a dollar bill with the prompt How many of each type of currency (dime, nickel, penny, quarter, fifty, five, hundred, one, ten, twenty) are there? Respond in JSON format with the currency type as the key and an integer count as the value.:

sample = datasets['test'][0]

md_answer = moondream.answer_question(

moondream.encode_image(sample['image']),

sample['qa'][0]['question'],

tokenizer=tokenizer,

)

sv.plot_image(sample['image'], (3,3))

print('Question:', sample['qa'][0]['question'])

print('Ground Truth:', sample['qa'][0]['answer'])

print('Moondream:', md_answer)It returned a very unhelpful, broken, and incorrect reply:

Other example responses from other images from the dataset were also not particularly helpful, correct, or consistent:

[0.39, 0.28, 0.67, 0.52]There is one silver coin in the image, which is a silver dollar coin. The coin is silver in color and features a profile of a man on it. The coin is worth one dollar.01 dime, 1 nickel, 1 penny, 1 quarter, 1 fifty, 1 five, 1 hundred, 1 ten, 1 twenty, 1 dollar bill...

After evaluating the entire test split of the dataset, it achieved roughly 0%, with none of the responses meeting the expected ground truth output.

Fine-Tuning Moondream2 for Counting Objects

Next, we finetune Moondream2 by configuring hyperparameters. Here, we set the number of epochs to 2 since our own testing corroborated that any less/more would lead to underfitting/overfitting.

The batch size was modified to take advantage of a more powerful GPU that happened to be available. For a T4 you might use in Google Colab, we recommend 6.

The rest of the parameters were left default to the creator’s implementation.

# Number of times to repeat the training dataset. Increasing this may cause the model to overfit or

# lose generalization due to catastrophic forgetting. Decreasing it may cause the model to underfit.

EPOCHS = 1

# Number of samples to process in each batch. Set this to the highest value that doesn't cause an

# out-of-memory error. Decrease it if you're running out of memory. Batch size 8 currently uses around

# 15 GB of GPU memory during fine-tuning.

BATCH_SIZE = 24

# Number of batches to process before updating the model. You can use this to simulate a higher batch

# size than your GPU can handle. Set this to 1 to disable gradient accumulation.

GRAD_ACCUM_STEPS = 1

# Learning rate for the Adam optimizer. Needs to be tuned on a case-by-case basis. As a general rule

# of thumb, increase it by 1.4 times each time you double the effective batch size.

#

# Source: https://www.cs.princeton.edu/~smalladi/blog/2024/01/22/SDEs-ScalingRules/

#

# Note that we linearly warm the learning rate up from 0.1 * LR to LR over the first 10% of the

# training run, and then decay it back to 0.1 * LR over the last 90% of the training run using a

# cosine schedule.

LR = 3e-5

# Whether to use Weights and Biases for logging training metrics.

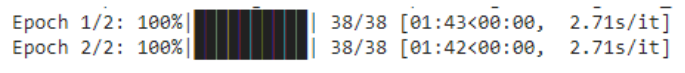

USE_WANDB = FalseOnce we kick off training, the training time will depend highly on the system, primarily the GPU, that is available to you.

Evaluating Fine-tuned Moondream2 Results

Now that we have completed the training process, we can evaluate the performance of the fine-tuned model using the same test data which was not part of the fine-tuned data.

moondream.eval()

correct = 0

for i, sample in enumerate(datasets['test']):

md_answer = moondream.answer_question(

moondream.encode_image(sample['image']),

sample['qa'][0]['question'],

tokenizer=tokenizer,

)

if md_answer == sample['qa'][0]['answer']:

correct += 1

if i < 21:

sv.plot_image(sample['image'], (3,3))

print('Ground Truth:', sample['qa'][0]['answer'])

print('Moondream:', md_answer)

print(f"\n\nAccuracy: {correct / len(datasets['test']) * 100:.2f}%")

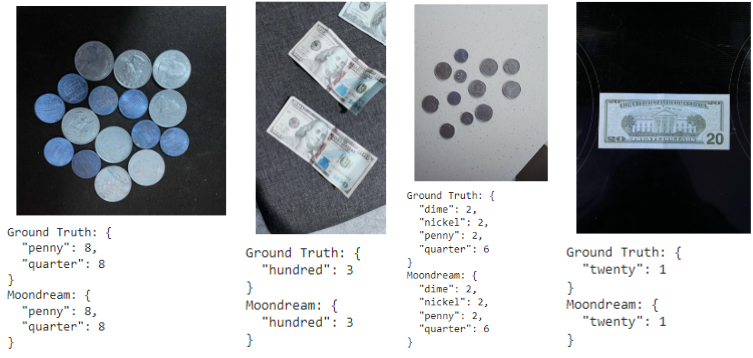

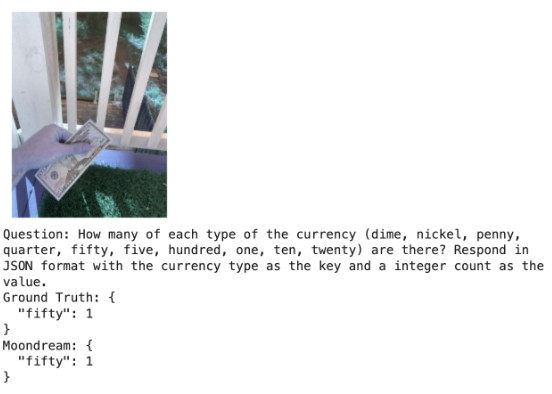

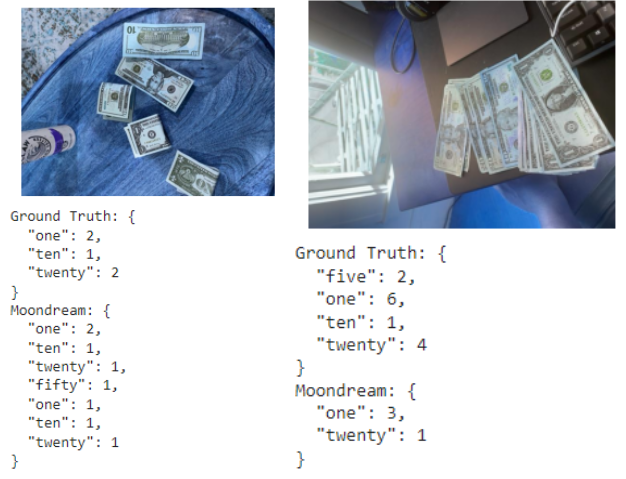

Looking at the samples, we see a much more consistent, predictable, and accurate output answer and output format.

Fine-tuned Moondream2 answered our very first test image with a much more accurate reply:

However, there are cases in which the fine-tuned version of Moondream still gets counts incorrect.

Overall, across the same testing dataset split, we got an accuracy of 85.50%.

Conclusion

Through this guide, we were able to take advantage of a computer vision dataset in order to finetune a vision language model to produce more consistent and accurate results in a format that makes it easy to parse for use in production applications. This brings VLMs from a level of an interesting experiment to a much more useful component to a larger computer vision system.

Cite this Post

Use the following entry to cite this post in your research:

Leo Ueno. (May 17, 2024). Finetuning Moondream2 for Computer Vision Tasks. Roboflow Blog: https://blog.roboflow.com/finetuning-moondream2/