Checking an object's alignment involves making sure it is exactly where it needs to be and in the correct position and direction. An object's alignment and position can require high levels of precision in various fields. For example, in manufacturing, even a small misalignment can cause defects. Similarly, in robotics, accurate positioning is needed for tasks like picking up objects.

An advanced method to automate checking the alignment of an object is using computer vision. Computer vision systems can capture images or video frames and use deep learning algorithms to accurately detect and analyze the object's position and orientation.

In this article, we’ll explore the methods of detecting alignment using computer vision, including how it works, traditional techniques, and real-world applications. We’ll also guide you through a step-by-step coding example to showcase how you can implement alignment detection in your projects. Let’s get started!

Understanding Object Alignment

Object alignment can be broken down into two main types: 2D alignment and 3D alignment. 2D alignment focuses on getting an object correctly positioned on a flat surface or within a two-dimensional plane. It’s about making sure that the object is properly oriented with respect to horizontal and vertical axes, and not tilted or shifted from its intended position. A common use for 2D alignment is image stitching, where several images are combined to create a single, seamless panorama.

3D alignment, on the other hand, deals with positioning objects in a three-dimensional space. This is trickier because it also checks that the object is properly oriented along the z-axis. Techniques like point cloud registration come into play here, and the Iterative Closest Point (ICP) algorithm is often used. It helps align 3D models or point clouds (collections of data points in 3D space) that are captured from different angles. 3D alignment is often used in robotics for precise navigation and handling of objects. It is also used in 3D reconstruction to create detailed models of objects or scenes.

Traditional Techniques for Alignment Detection

In the past, traditional image analysis methods were widely used to detect the alignment of objects. These techniques are still important today and serve as the building blocks for many modern computer vision techniques. Let's take a look at three key traditional techniques: edge detection, feature matching, and reference markers.

Edge detection can help you find the boundaries or edges of an object in an image. By identifying these edges, you can determine how an object is positioned or aligned relative to something else. This method works well when the object has clear, sharp edges. However, it can be tricky when the conditions aren't perfect. For example, when the lighting is poor, there's noise, or part of the object is hidden. One popular algorithm used for edge detection is Canny Edge Detection. Techniques like applying a Gaussian Blur before detection or using adaptive methods like Otsu's thresholding can help make edge detection more accurate.

Feature matching involves comparing specific details or key points between different images to check for alignment. It looks for unique features in one image and tries to match them with similar ones in another. However, it can be challenging when the objects don’t have strong, distinctive features or when there are big changes in scale, rotation, or lighting between the images. Common methods like Scale-Invariant Feature Transform (SIFT) and Speeded Up Robust Features (SURF) are often used for feature matching, though they can have limitations in tough conditions.

Reference markers are fixed points or features in a frame that act as anchors for measuring an object’s alignment. These markers are especially useful when you need accurate and repeatable measurements, like in 3D metrology. By placing these markers at known positions, you can accurately determine an object’s position, orientation, and scale within a 3D space.

Methods of Detecting Alignment Using Computer Vision

Computer vision can make detecting and measuring the alignment of objects simpler. With advanced algorithms and machine learning, we can accurately determine an object’s orientation, angle, and position in both 2D and 3D spaces. Let's explore three key methods used in detecting alignment: object orientation detection, angle measurement, and pose estimation.

Object orientation detection focuses on identifying key features like edges and corners to understand the object’s orientation. A common technique used here is Principal Component Analysis (PCA). PCA helps by simplifying the image data and highlighting the most important features. It finds the main directions in which the data varies (known as eigenvectors) and uses them to determine how the object is oriented. For example, PCA can analyze how pixel intensities are spread out in an image to create new axes that more accurately reflect the object’s true position.

Angle measurement can be used after determining the object’s orientation. The angle between the detected orientation and a reference line can be calculated. It is handy in situations where even a small misalignment can cause problems.

Pose estimation is a more advanced technique for determining an object’s orientation and position in three-dimensional (3D) space. It typically starts by using deep learning techniques, such as Convolutional Neural Networks (CNNs), to extract key features from an image. These features are then used to calculate the object’s 3D orientation and position relative to a camera or observer. Mathematical models, like the perspective-n-point (PnP) algorithm, help connect the 2D points from the image to their corresponding 3D coordinates.

In addition to methods like object orientation detection, angle measurement, and pose estimation, you can also use simpler approaches like object detection and logical checks to determine alignment. For instance, you can set up predefined zones within an image or space and check if the detected objects are positioned correctly within these zones. In the next section, we’ll take a closer look at how this works.

Detect Object Alignment: How To

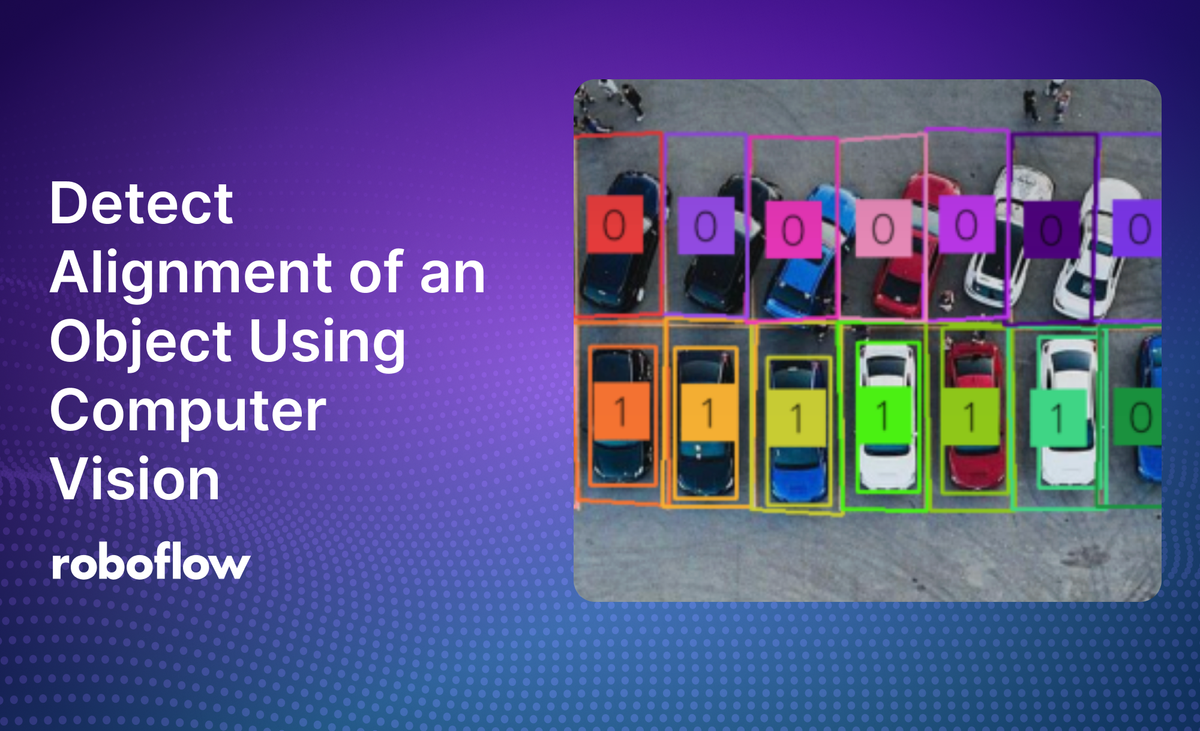

In this example, we will walk through the process of detecting the alignment of an object using computer vision. The code we'll go over is designed to detect and check if objects fall within specified polygon zones. We’ll be using this approach to see if cars are parked correctly within their spots by defining parking spaces as polygons and making sure that the detected vehicles are fully within these areas.

To try this yourself, you’ll need an image to run inferences on. We’ve used an image downloaded from the Internet. You can use the same image or use your own image. Let's dive in!

Step #1: Install Necessary Libraries and Load the Model

First, ensure you have the necessary Python libraries installed. We'll be using libraries like inference for model loading, opencv for image handling, and supervision for processing detections and annotations.

First, install the inference library.

pip install inferenceNext, import the necessary libraries:

import numpy as np

import supervision as sv

import cv2

import inferenceNext, we can load a YOLOv8 model:

model = inference.get_model("yolov8x-640")Step #2: Load the Image and Run Inferences

Load the input image and use the YOLOv8 model to detect objects within it:

image = cv2.imread("path/to/image/file")

results = model.infer(image)[0]

detections = sv.Detections.from_inference(results)Here, the image is loaded using OpenCV’s imread function. The model then processes the image, and the results are converted into a format that supervision can use to manage the detections.

Step #3: Define Polygons for Zones

In this step, we define several polygons, each represented by a list of coordinates. These polygons are vital because they define the zones where the alignment of objects will be checked. You can create these polygons using PolygonZone, a tool that allows you to draw polygons on images and retrieve the coordinates. Learn more about how to use it in this blog post.

The following code snippet defines the polygons that represent the areas where you want to check for alignment.

polygons = [

np.array([[67, 416], [141, 416], [141, 273], [67, 278]]),

np.array([[140, 416], [208, 416], [208, 279], [135, 275]]),

...

# Add more polygons as needed

]Step #4: Create Polygon Zones and Annotators

With the polygons defined, we can now create zones and corresponding annotators. We create a list of PolygonZone objects, each associated with one of the polygons. The triggering_anchors parameter is set to check all four corners of the objects, ensuring they fall within the defined zone. The PolygonZone class is a useful tool for defining these zones and lets us easily check if the detected objects align within these areas.

zones = [

sv.PolygonZone(

polygon=polygon,

triggering_anchors=(sv.Position.TOP_LEFT,

sv.Position.TOP_RIGHT,

sv.Position.BOTTOM_LEFT,

sv.Position.BOTTOM_RIGHT),

)

for polygon in polygons

]Next, we create annotators to visually mark these zones and detected objects. The PolygonZoneAnnotator and BoxAnnotator help adding visual elements to the output image. These annotators will draw the polygons and bounding boxes, and make it easy to see where the objects are and if they are aligned correctly.

zone_annotators = [

sv.PolygonZoneAnnotator(

zone=zone,

color=colors.by_idx(index),

thickness=2,

text_thickness=1,

text_scale=1

)

for index, zone

in enumerate(zones)

]

box_annotators = [

sv.BoxAnnotator(

color=colors.by_idx(index),

thickness=2

)

for index

in range(len(polygons))

]Step #5: Apply Annotations and Display the Result

Finally, we can filter the detections to only include those within the defined zones, apply the annotations, and display the resulting image.

for zone, zone_annotator, box_annotator in zip(zones, zone_annotators, box_annotators):

mask = zone.trigger(detections=detections)

detections_filtered = detections[mask]

frame = box_annotator.annotate(scene=image, detections=detections_filtered)

frame = zone_annotator.annotate(scene=frame)

sv.plot_image(frame, (8, 8))Here is the output image. As you can see, the parking spots labeled as “0” have vehicles that are not aligned properly, and the parking spots labeled as “1” have vehicles aligned and parked properly within them.

Challenges and Considerations

Detecting object alignment using computer vision is a powerful tool, but it comes with its own set of challenges. Here are a few key challenges:

- Lower Accuracy with Complex Objects: Objects with unusual shapes or intricate designs can sometimes confuse detection algorithms and lead to alignment errors. Variations in texture, color, or surface conditions can also make it harder for the algorithms to consistently identify and align objects correctly.

- Handling Unusual Cases: Algorithms might struggle with rare or unusual situations, often called "edge cases," and may need more training data to handle these scenarios effectively.

- Environmental Factors: Changes in lighting can create shadows or highlights that obscure important details and make alignment harder to detect accurately. A busy or cluttered background can also confuse the detection algorithms, and reflective or transparent surfaces can distort the object’s appearance and complicate the process.

- Real-Time Processing: When alignment detection needs to happen in real-time, like on an assembly line, the system has to process images and make decisions quickly. Real-time processing may require more expensive hardware to support the amount of processing power required.

- Integration Challenges: Integrating computer vision systems with existing machinery and workflows can be complex and requires careful planning to make sure everything works smoothly together.

Applications and Use Cases

Detecting the alignment of an object using computer vision can play an essential role in various industries to make different processes more efficient and accurate. Let’s explore how this technology is applied in different fields.

Manufacturing and Quality Control

Manufacturing is one of the main areas where object alignment detection truly shines. For example, car manufacturers like BMW use advanced alignment technologies to produce complex parts with extreme precision. Computer vision systems can help improve both the quality of their vehicles and the speed of production.

Another good example is in the pharmaceutical industry; companies like Johnson & Johnson use AI-powered systems to monitor and control manufacturing processes, leading to more consistent and higher-quality products. By automating tasks such as visual inspections, error detection, and assembly processes, computer vision helps manufacturers increase productivity, enhance safety, and reduce human errors.

Robotics

Robotics heavily depends on object alignment detection for tasks like picking items from bins, tending to machines, and assembling parts. Companies like Mech-Mind Robotics provide solutions that help robots quickly and accurately align with objects, making these tasks smoother and more efficient. Precise alignment can help robots operate reliably and perform complex tasks without human intervention.

Augmented Reality (AR)

Augmented reality benefits from object alignment detection using computer vision, especially in industrial settings. AR systems can overlay digital instructions, and alignment guides directly onto the physical workspace to help workers assemble parts with precision and reduce the chance of errors. AR also supports interactive training. Workers can practice aligning and assembling components in a virtual environment before doing it in real life. Practice improves their skills and enhances the quality of the final product.

AR Techniques in Medical and Industrial Robotic Applications. (Source)

3D Printing

With respect to 3D printing, alignment detection is critical for achieving high-quality prints. The print head and material have to be perfectly aligned to produce accurate results. Computer vision systems can monitor the printing process in real time, and help make adjustments as needed to maintain precision. After printing, these systems can detect any misalignments or defects and allow for quick corrections. Some advanced systems even combine AR with robotic 3D printing so that designers can interactively modify and align printed features in real-time.

Conclusion

Accurate alignment detection is a powerful tool that transforms how industries operate, bringing greater precision, efficiency, and product quality. By minimizing errors and automating important tasks, alignment detection increases productivity and helps maintain high-quality standards with little need for human intervention. Whether it’s in manufacturing, robotics, augmented reality, or 3D printing, checking that objects are perfectly aligned can be very useful. Embracing computer vision can open the door to new possibilities, drive innovation, and set the stage for even greater advancements in your field.

Keep Reading

- An article on error proofing in manufacturing with computer vision

- An article on building a juice box quality inspection system

- An article on how to create a workout pose correction tool

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Sep 19, 2024). Detect Alignment of an Object Using Computer Vision. Roboflow Blog: https://blog.roboflow.com/object-alignment/