Object tracking is a computer vision task that can identify various objects and track them through the frames of a video.

Knowing where an object is in a video has many real-life applications, especially in manufacturing and logistics. For example, object tracking can be used to monitor assembly lines, track inventory in warehouses, and help optimize supply chain management.

In this article, we’ll explore how object tracking has evolved over the years, how it works, and the top seven open-source object-tracking algorithms.

The Evolution of Object Tracking Software

Before we take a look at how object tracking works, let’s understand how it came to be.

Initially, in the late 1980s and early 1990s, object tracking relied heavily on techniques like background subtraction and frame differencing. These approaches were groundbreaking but struggled with dynamic backgrounds and changes in lighting.

As the field matured in the mid to late 1990s, feature-based tracking algorithms emerged. These follow distinctive features of an object, such as its edges and corners.

Around the same time, techniques such as Kalman filtering (a probabilistic method for predicting an object's state) and optical flow (estimating how pixels move between frames) became standard building blocks for more advanced tracking. However, the real breakthrough came in the 2010s with the rise of deep learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs). These methods made it possible to track complex objects with much higher accuracy, robustness, and adaptability. Today's systems can track multiple objects simultaneously, even in challenging conditions, and can learn to adapt to new objects or environments.

How Object Tracking Software Works

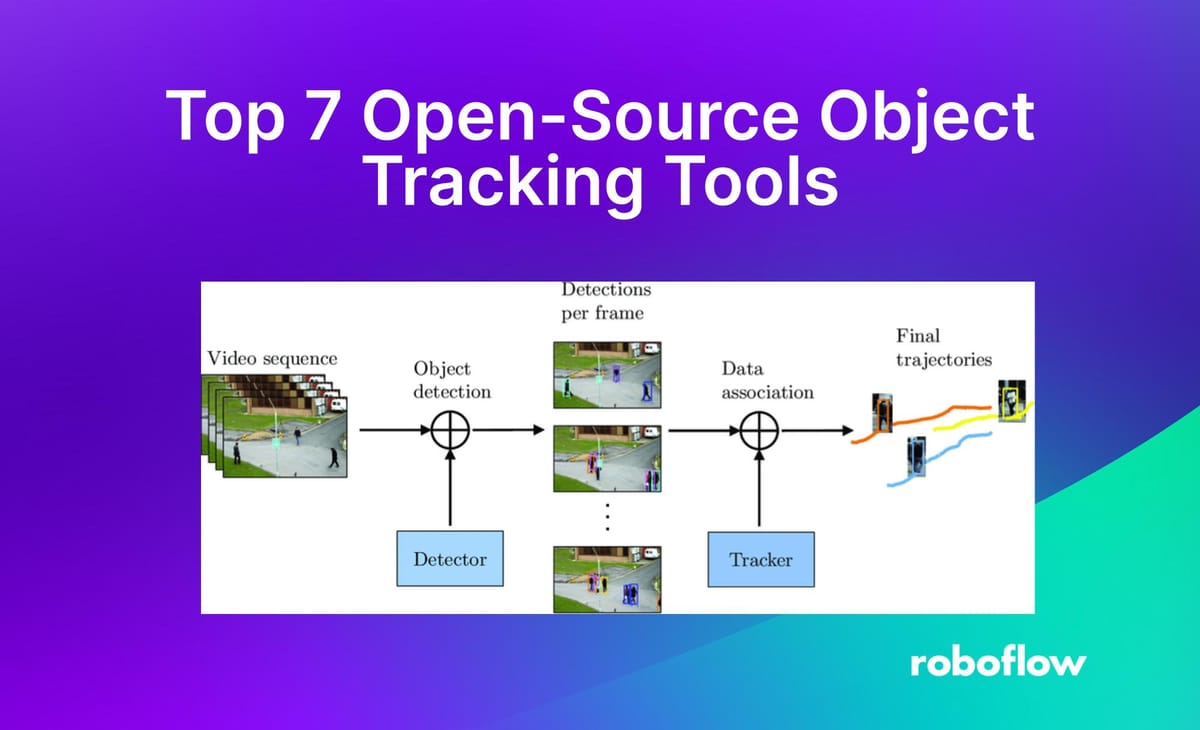

The basic concept of object tracking is to detect an object in a video and then predict its position across a sequence of frames. This combines two kinds of algorithms: object detection, which identifies the object with a bounding box in a reference frame, and object tracking, which predicts its movement and follows it through the rest of the video. Once an object is detected, the tracker follows its movement over time. Many trackers can follow multiple objects simultaneously, and some systems extend tracking across multiple camera views.
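To make the detect-then-track loop concrete, here is a minimal sketch in plain Python. It is a toy IoU (intersection-over-union) tracker, not the method used by any tool covered below: it assumes a detector already returns boxes as `(x1, y1, x2, y2)` tuples for each frame, and the `IoUTracker` class and its 0.3 overlap threshold are hypothetical choices made for illustration.

```python
from itertools import count

# Boxes are assumed to be (x1, y1, x2, y2) tuples in pixels.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Hypothetical toy tracker: links each new detection to the track whose
    last box overlaps it most, and gives unmatched detections fresh IDs."""

    def __init__(self, iou_threshold: float = 0.3) -> None:
        self.iou_threshold = iou_threshold
        self.tracks: dict[int, Box] = {}  # track ID -> last seen box
        self._next_id = count(1)

    def update(self, detections: list[Box]) -> dict[int, Box]:
        # Score every (track, detection) pair and consider the best overlaps first.
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        updated: dict[int, Box] = {}
        used: set[int] = set()
        for score, tid, j in pairs:
            if score < self.iou_threshold:
                break  # all remaining pairs overlap even less
            if tid in updated or j in used:
                continue  # track or detection already claimed
            updated[tid] = detections[j]
            used.add(j)
        # Detections that matched no track start new tracks.
        for j, det in enumerate(detections):
            if j not in used:
                updated[next(self._next_id)] = det
        # Tracks without a match are dropped (no memory for occlusions).
        self.tracks = updated
        return updated


tracker = IoUTracker()
print(tracker.update([(0, 0, 10, 10)]))                      # {1: (0, 0, 10, 10)}
print(tracker.update([(1, 0, 11, 10), (50, 50, 60, 60)]))    # {1: (1, 0, 11, 10), 2: (50, 50, 60, 60)}
```

Because unmatched tracks are dropped immediately, this toy loses an object's ID after a single missed detection. The techniques below, and the tools later in this article, largely exist to fix that weakness.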

Here are a few key techniques involved:

- Feature Extraction: Identifying unique characteristics of an object, such as color, shape, and texture, to tell it apart from the background and other objects.

- Motion Estimation and Trajectory Prediction: Predicting the object's future position based on its movement in previous frames.

- Data Association: Matching new detections to existing tracks so that each object keeps the same unique ID across frames, even during partial occlusions or background clutter.

- Re-identification: When the object goes out of frame or becomes temporarily obscured (like another object passing in front of it), re-identification methods help the software detect and re-establish the identity of the object when it reappears. Deep learning-based approaches like appearance matching are often used for this.
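Motion estimation in SORT-style trackers (including DeepSORT and BoT-SORT, discussed below) is typically handled by a Kalman filter. The sketch below is a deliberately simplified 1-D constant-velocity version: real trackers filter a full box state (center, size, and their velocities), and the noise settings `q` and `r` are arbitrary illustrative values, not defaults from any library.

```python
from dataclasses import dataclass, field


@dataclass
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter; state is [position, velocity]."""
    pos: float
    vel: float = 0.0
    q: float = 0.01  # process noise (illustrative): trust in the motion model
    r: float = 1.0   # measurement noise (illustrative): trust in the detector
    P: list = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])  # state covariance

    def predict(self, dt: float = 1.0) -> float:
        # State transition x <- F x with F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        (p00, p01), (p10, p11) = self.P
        # Covariance P <- F P F^T + Q, with Q simplified to q * identity.
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]
        return self.pos

    def update(self, z: float) -> float:
        # Only position is measured, so H = [1, 0].
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r              # innovation covariance
        k0, k1 = p00 / s, p10 / s     # Kalman gain for position and velocity
        y = z - self.pos              # innovation: measured minus predicted
        self.pos += k0 * y
        self.vel += k1 * y
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]
        return self.pos


kf = ConstantVelocityKF(pos=0.0)
for frame in range(1, 10):
    kf.predict()
    kf.update(float(frame))  # the detector sees the object move 1 unit per frame
print(round(kf.vel, 2), round(kf.predict(), 2))
```

When a detection is missing, calling `predict()` alone extrapolates the track along its estimated velocity, which is how a tracker can estimate where a briefly occluded object should reappear.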

Top 7 Open-Source Object Tracking Tools

Now, let’s walk through the top seven open-source object-tracking tools and their key features.

ByteTrack

ByteTrack is a multi-object tracking algorithm that assigns a unique ID to each object in a video. Most tracking methods keep only high-score detection boxes and discard low-score ones, which misses some real objects and fragments their trajectories. ByteTrack instead uses every detection box, from high to low scores, in its matching process.

It first matches high-score detection boxes to existing tracklets based on motion similarity, that is, how well each box overlaps a tracklet's predicted position. Tracklets are short segments of tracked object trajectories that are used to maintain continuous tracking over time. ByteTrack then matches the low-score boxes to the tracklets left unmatched in the first pass. This second pass recovers objects whose detection scores dropped because they were partially occluded or blurred by fast motion, while low-score boxes that match no tracklet are treated as background and discarded.
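ByteTrack's two-pass association can be sketched as follows. This is a simplified illustration, not the official implementation: it assumes tracklet boxes have already been moved to their motion-predicted positions (ByteTrack uses a Kalman filter for this), it uses greedy IoU matching where the paper uses the Hungarian algorithm, and the 0.6 score split and 0.3 IoU threshold are hypothetical values chosen for the example.

```python
# Boxes are (x1, y1, x2, y2); detections are (box, score) pairs.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: dict[int, Box], dets: list[Box], min_iou: float):
    """Greedy IoU matching, a simplified stand-in for the Hungarian algorithm.

    Returns (matches {track_id: det_index}, unmatched track IDs, unmatched det indices).
    """
    pairs = sorted(
        ((iou(box, det), tid, d)
         for tid, box in tracks.items()
         for d, det in enumerate(dets)),
        reverse=True,
    )
    matches: dict[int, int] = {}
    used: set[int] = set()
    for score, tid, d in pairs:
        if score < min_iou:
            break
        if tid in matches or d in used:
            continue
        matches[tid] = d
        used.add(d)
    unmatched_tracks = [t for t in tracks if t not in matches]
    unmatched_dets = [d for d in range(len(dets)) if d not in used]
    return matches, unmatched_tracks, unmatched_dets


def byte_associate(tracks: dict[int, Box], detections: list[tuple[Box, float]],
                   high: float = 0.6, min_iou: float = 0.3):
    """Two-pass BYTE-style association with hypothetical thresholds.

    `tracks` maps track IDs to motion-predicted boxes (prediction not shown).
    Returns (updated {track_id: box}, boxes that should start new tracks).
    """
    high_dets = [box for box, score in detections if score >= high]
    low_dets = [box for box, score in detections if score < high]

    # Pass 1: high-score detections against every track.
    m1, left_tracks, left_high = greedy_match(tracks, high_dets, min_iou)

    # Pass 2: low-score detections against the tracks pass 1 left unmatched.
    # Low-score boxes that still match nothing are treated as background.
    m2, _, _ = greedy_match({t: tracks[t] for t in left_tracks}, low_dets, min_iou)

    updated = {t: high_dets[d] for t, d in m1.items()}
    updated.update({t: low_dets[d] for t, d in m2.items()})
    # Only leftover high-score boxes are trusted enough to start new tracks.
    new_tracks = [high_dets[d] for d in left_high]
    return updated, new_tracks


tracks = {1: (0, 0, 10, 10), 2: (20, 0, 30, 10)}
detections = [
    ((1, 0, 11, 10), 0.9),         # confident box near track 1
    ((21, 0, 31, 10), 0.3),        # weak box near track 2 (e.g. partly occluded)
    ((100, 100, 110, 110), 0.2),   # weak box matching nothing: background
    ((50, 50, 60, 60), 0.8),       # confident box matching nothing: new object
]
updated, new_tracks = byte_associate(tracks, detections)
print(updated)     # {1: (1, 0, 11, 10), 2: (21, 0, 31, 10)}
print(new_tracks)  # [(50, 50, 60, 60)]
```

Track 2 survives only because of the second pass: its detection scored 0.3, so a tracker that kept only high-score boxes would have lost it.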

Because ByteTrack can track multiple objects in a video at once, it could be applied to scenarios like Boston Dynamics' robots stacking boxes in a warehouse. These robots use machine vision to lift, move, and place boxes with precision, and ByteTrack could improve their efficiency by accurately tracking the position and movement of each box.

Norfair

Norfair by Tryolabs is a lightweight and customizable object-tracking library that works with most detectors. Users define the function that calculates the distance between tracked objects and new detections, which can be as simple as a one-liner for Euclidean distance or more complex using external data like embeddings or a Person ReID model.

Norfair can also be easily integrated into complex video processing pipelines or used to build a video inference loop from scratch. That makes it suitable for applications like monitoring workers in a factory, surveillance, sports analytics, and traffic monitoring.

Norfair offers several predefined distance functions, and users can create their own to implement different tracking strategies. It powers various video analytics applications and supports Python 3.8+, with the latest version for Python 3.7 being Norfair 2.2.0.
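The pluggable-distance idea is easy to illustrate without Norfair itself. In Norfair, you pass either a built-in name such as `"euclidean"` or your own callable to `Tracker` through its `distance_function` argument, together with a `distance_threshold`. The stdlib sketch below only mimics that contract using a hypothetical `match` helper and point-based detections; it does not use Norfair's classes.

```python
import math
from typing import Callable

# Detections and tracked objects are reduced to (x, y) centroids here.
Point = tuple[float, float]
DistanceFn = Callable[[Point, Point], float]


def euclidean(det: Point, trk: Point) -> float:
    return math.dist(det, trk)  # the "one-liner" case


def manhattan(det: Point, trk: Point) -> float:
    # A different strategy, swapped in without touching the matcher.
    return abs(det[0] - trk[0]) + abs(det[1] - trk[1])


def match(detections: list[Point], tracked: dict[int, Point],
          distance_fn: DistanceFn, threshold: float) -> dict[int, int]:
    """Hypothetical helper (not Norfair's API): greedily pair each tracked
    object with its closest detection, ignoring pairs farther than threshold.

    Returns {track_id: detection_index}.
    """
    candidates = sorted(
        (distance_fn(det, pos), tid, i)
        for tid, pos in tracked.items()
        for i, det in enumerate(detections)
    )
    matches: dict[int, int] = {}
    used: set[int] = set()
    for dist, tid, i in candidates:
        if dist > threshold:
            break  # candidates are sorted, so the rest are farther still
        if tid in matches or i in used:
            continue
        matches[tid] = i
        used.add(i)
    return matches


tracked = {1: (0.0, 0.0), 2: (100.0, 100.0)}
detections = [(98.0, 103.0), (3.0, 4.0)]
print(match(detections, tracked, euclidean, threshold=10.0))  # {2: 0, 1: 1}
print(match(detections, tracked, manhattan, threshold=6.0))   # {2: 0}
```

The threshold is interpreted in the units of whichever distance you supply, so swapping the distance function usually means retuning the threshold too.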

MMTracking

MMTracking is a free and open-source toolbox for video perception. It's built on PyTorch and is part of a larger project called OpenMMLab. MMTracking stands out because it unifies multiple video analysis tasks in a single platform: video object detection, single-object tracking, multi-object tracking, and video instance segmentation. Thanks to its modular design, you can swap components in and out to build custom methods for your specific needs.

It also works well with other OpenMMLab projects, especially MMDetection: you can use any object detector available in MMDetection with MMTracking by changing just a few configuration settings. On top of that, MMTracking includes pretrained models that achieve very high accuracy, some of which outperform the official implementations of the same methods.

MMTracking is well-suited for applications that require both high accuracy and speed, such as automated inspection in manufacturing and autonomous driving, and its modular design makes it easy to adapt to other video analytics tasks.

DeepSORT

DeepSORT (Simple Online and Realtime Tracking with a Deep Association Metric) is an improved version of the SORT algorithm. Like SORT, it uses a Kalman filter to predict object movements, but it adds a deep learning feature extractor that computes an appearance embedding for each detected object. Comparing these embeddings helps keep identities apart when many objects are tracked at once, so DeepSORT handles occlusions and interactions between objects much better than SORT. That makes it well suited to surveillance and crowd-monitoring applications.
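DeepSORT's appearance matching can be sketched as a gated cosine distance. In this simplified version, each track keeps a small gallery of past embeddings, and a detection's cost is its smallest cosine distance to that gallery. The motion gate here is a plain center-distance check standing in for DeepSORT's Mahalanobis gate on the Kalman prediction, the `max_motion` and `max_appearance` thresholds are hypothetical values, and the embeddings would come from the deep feature extractor.

```python
import math

Vector = list[float]
Point = tuple[float, float]


def cosine_distance(a: Vector, b: Vector) -> float:
    """1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def association_cost(det_embedding: Vector, det_center: Point,
                     track_gallery: list[Vector], predicted_center: Point,
                     max_motion: float = 50.0, max_appearance: float = 0.2) -> float:
    """Gated appearance cost between one detection and one track.

    Thresholds are hypothetical. The motion gate is a simple center-distance
    check standing in for DeepSORT's Mahalanobis gate on the Kalman prediction.
    """
    if math.dist(det_center, predicted_center) > max_motion:
        return math.inf  # implausible given where the track was predicted to be
    # Smallest distance to any embedding the track has stored so far.
    cost = min(cosine_distance(det_embedding, f) for f in track_gallery)
    return cost if cost <= max_appearance else math.inf


gallery = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]  # past embeddings of one track
print(association_cost([1.0, 0.05, 0.0], (105.0, 98.0), gallery, (100.0, 100.0)))
print(association_cost([1.0, 0.05, 0.0], (400.0, 98.0), gallery, (100.0, 100.0)))  # inf
print(association_cost([0.0, 0.0, 1.0], (105.0, 98.0), gallery, (100.0, 100.0)))   # inf
```

Pairs with an infinite cost are never matched, so a detection that looks right but is far from the track's predicted position, or is nearby but looks different, cannot take over the track's ID.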

FairMOT

FairMOT is a tracking approach based on the anchor-free object detection architecture, CenterNet. Unlike other frameworks that treat detection as primary and re-identification (re-ID) as secondary, FairMOT treats both tasks equally. It has a simple network structure with two similar branches: one for detecting objects and another for extracting re-ID features.

FairMOT uses ResNet-34 as its backbone to strike a good balance between accuracy and speed, and enhances it with Deep Layer Aggregation (DLA) to combine information from multiple layers. Deformable convolutions in the up-sampling modules adapt to different object scales and poses, which helps solve alignment issues. The detection branch, based on CenterNet, has three parallel heads that estimate a heatmap of object centers, center offsets, and bounding box sizes. The re-ID branch produces an identity embedding at each object center so the same object can be recognized across frames.
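Because the three detection heads and the re-ID branch are all read out at the same center locations, decoding can be sketched as below. This is an illustrative decoder in plain Python, not FairMOT's code: the head outputs are given as nested lists on the output grid, the output stride is assumed to be 4, box sizes are assumed to be in input-image pixels, and the 0.5 score threshold is a hypothetical choice.

```python
def decode(heatmap, offsets, sizes, embeddings, stride=4, score_thresh=0.5):
    """Turn FairMOT-style head outputs into boxes plus re-ID embeddings.

    Assumed (hypothetical) layout: each argument is an H x W nested list on
    the output grid; offsets[y][x] = (dx, dy) in grid cells; sizes[y][x] =
    (w, h) in input-image pixels; embeddings[y][x] is that cell's re-ID vector.
    """
    H, W = len(heatmap), len(heatmap[0])
    results = []
    for y in range(H):
        for x in range(W):
            score = heatmap[y][x]
            if score < score_thresh:
                continue
            # Keep only local maxima in the 3x3 neighborhood (CenterNet-style peak picking).
            neighborhood = [heatmap[j][i]
                            for j in range(max(0, y - 1), min(H, y + 2))
                            for i in range(max(0, x - 1), min(W, x + 2))]
            if score < max(neighborhood):
                continue
            dx, dy = offsets[y][x]
            w, h = sizes[y][x]
            # Refine the center with the offset head, then map it to image pixels.
            cx, cy = (x + dx) * stride, (y + dy) * stride
            box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
            # The re-ID feature is read at the same center as the box.
            results.append((box, score, embeddings[y][x]))
    return results


heat = [[0.1, 0.2, 0.1],
        [0.2, 0.9, 0.3],
        [0.1, 0.2, 0.1]]
offs = [[(0.5, 0.25)] * 3 for _ in range(3)]
wh = [[(8.0, 8.0)] * 3 for _ in range(3)]
emb = [[[1.0, 0.0]] * 3 for _ in range(3)]
print(decode(heat, offs, wh, emb))  # [((2.0, 1.0, 10.0, 9.0), 0.9, [1.0, 0.0])]
```

Returning the embedding alongside each box reflects FairMOT's design: detection and re-ID come from a single forward pass, so every detected center already carries its identity feature.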

FairMOT is useful in scenarios requiring balanced accuracy and speed, such as real-time quality inspection on manufacturing floors, security monitoring, and autonomous vehicle navigation. Its ability to handle occlusions and dynamic object scales makes it a good choice for these applications.

BoT-SORT

BoT-SORT (Bag of Tricks for SORT) is a multi-object tracking method that improves on traditional approaches like SORT (Simple Online and Realtime Tracking). Researchers at Tel Aviv University developed it to track objects more accurately and reliably. It combines motion and appearance information to distinguish between different objects and maintain their identities over time.

BoT-SORT also includes Camera Motion Compensation (CMC), which accounts for camera movement so that tracking remains accurate even when the camera is not stationary. Additionally, BoT-SORT uses a refined Kalman filter that estimates an object's width and height directly, so it can predict the positions and sizes of tracked objects more precisely. These improvements help BoT-SORT perform well even in challenging situations with lots of movement or crowded scenes. At the time of its publication, it ranked first on the MOT17 and MOT20 benchmarks in the key metrics MOTA (Multiple Object Tracking Accuracy), IDF1 (Identity F1 Score), and HOTA (Higher Order Tracking Accuracy).
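Camera motion compensation can be pictured as warping each track's predicted box by the camera's estimated motion before matching. The sketch below assumes the frame-to-frame motion has already been estimated as a 2x3 affine matrix (how that estimate is obtained is outside its scope) and simply warps the box corners. BoT-SORT itself applies the transform to the Kalman filter's state, so treat this as a simplified illustration; the function names are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)
# Assumed 2x3 affine camera-motion estimate mapping previous-frame coordinates
# to current-frame coordinates: ((a, b, tx), (c, d, ty)).
Affine = tuple[tuple[float, float, float], tuple[float, float, float]]


def warp_point(m: Affine, x: float, y: float) -> tuple[float, float]:
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


def compensate_box(box: Box, m: Affine) -> Box:
    """Warp a predicted box by the camera motion and re-fit an axis-aligned box."""
    x1, y1, x2, y2 = box
    corners = [warp_point(m, x, y) for x in (x1, x2) for y in (y1, y2)]
    xs = [cx for cx, _ in corners]
    ys = [cy for _, cy in corners]
    return (min(xs), min(ys), max(xs), max(ys))


# The camera panned right, so static content shifts 15 px left in the image.
pan = ((1.0, 0.0, -15.0), (0.0, 1.0, 0.0))
print(compensate_box((100.0, 50.0, 140.0, 90.0), pan))  # (85.0, 50.0, 125.0, 90.0)
```

Without this correction, a 15-pixel pan would shift every object away from its predicted box and lower the IoU scores used for matching.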

BoT-SORT is a good choice for industrial applications due to its high accuracy and robustness in tracking. In environments like large warehouses, where lighting conditions can change, and other items can often occlude objects, BoT-SORT can still perform well. It can be used to track packages and pallets to improve inventory management and reduce the risk of lost items. Its ability to monitor the movement of goods from receiving to shipping helps streamline operations and enhance supply chain visibility. Precise tracking supports logistics operations with real-time updates on the location and status of each item.

StrongSORT

StrongSORT upgrades the classic DeepSORT algorithm to address common weaknesses in multi-object tracking, such as weak detections and unreliable object association. It pairs a stronger object detector and a more sophisticated feature embedding model with several tricks to improve tracking performance. It also introduces two new algorithms, AFLink (Appearance-Free Link) and GSI (Gaussian-smoothed Interpolation), which improve tracking accuracy without relying on appearance features.

AFLink and GSI are lightweight and easy to integrate into other tracking systems. AFLink links short tracklets into complete trajectories using only spatiotemporal information, which makes it both fast and accurate. GSI compensates for missing detections by modeling each trajectory's motion with Gaussian process regression and interpolating the gaps. With these additions, StrongSORT achieved top results on several public benchmarks, including MOT17, MOT20, DanceTrack, and KITTI.
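The gap-filling idea behind GSI can be approximated in a few lines. StrongSORT's GSI uses Gaussian process regression; the sketch below is a simpler stand-in that linearly interpolates missing frames and then applies a Gaussian kernel smoother to one coordinate of a trajectory. The function names and the `sigma` value are hypothetical.

```python
import math


def interpolate_gaps(track: dict[int, float]) -> dict[int, float]:
    """Linearly fill frames missing between observations of one coordinate."""
    frames = sorted(track)
    filled = dict(track)
    for f0, f1 in zip(frames, frames[1:]):
        for f in range(f0 + 1, f1):
            t = (f - f0) / (f1 - f0)
            filled[f] = track[f0] + t * (track[f1] - track[f0])
    return dict(sorted(filled.items()))


def gaussian_smooth(series: dict[int, float], sigma: float = 1.0) -> dict[int, float]:
    """Replace each value with a Gaussian-weighted average over nearby frames.

    A kernel smoother, used here as a simplified stand-in for GSI's
    Gaussian process regression.
    """
    smoothed = {}
    for f in series:
        weights = {g: math.exp(-((f - g) ** 2) / (2 * sigma ** 2)) for g in series}
        total = sum(weights.values())
        smoothed[f] = sum(w * series[g] for g, w in weights.items()) / total
    return smoothed


# x-coordinate of one track; frames 2 and 3 had no detection.
xs = {0: 0.0, 1: 1.0, 4: 4.0}
filled = interpolate_gaps(xs)
print(filled)
print(gaussian_smooth(filled))
```

In practice this would run once per box coordinate for each finished trajectory. Interior points of a smooth trajectory stay nearly unchanged, while jittery detections are pulled toward their neighbors.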

Thanks to StrongSORT's accuracy and robustness, it can be used in applications where objects need to be tracked very carefully. For example, let's say there's a camera that gives a bird's eye view of a construction site, where many heavy vehicles are constantly moving around. StrongSORT can monitor these vehicles in real-time, help prevent accidents, and ensure efficient operations. Its ability to handle occlusions and variable lighting conditions makes it reliable even in a construction site's dynamic and often chaotic environment.

Challenges and Limitations with Object Tracking

While object tracking technology has advanced considerably, there are still factors that can make getting consistent results difficult. Some challenges and limitations to keep in mind while using these object tracking tools are:

- Occlusion: Occlusion happens when one object hides another, such as two people walking past each other or a car driving under a bridge. It is difficult for systems to track partially obscured objects.

- Variability in Appearance: Objects can look different based on their distance, angle, or size relative to the camera.

- Lighting Changes: Changes in lighting can alter an object's appearance, complicating detection and tracking. Computer vision systems often struggle with these variations.

- Multi-Camera Tracking: Following an object across multiple cameras requires re-identification (ReID) to match the same object between views, which calls for an additional set of algorithms.

- Scalability: Scaling solutions for many cameras or dispersed deployments can be complex and expensive, often requiring extra infrastructure or custom development.

Object Tracking

Object tracking technology has come a long way. Today, AI-powered systems can track multiple objects with high accuracy, even in challenging conditions. Object tracking and computer vision have a wide range of applications, from improving processes in manufacturing to enhancing logistics workflows. As object tracking continues to develop, we can expect even more exciting advancements in the years to come!

Explore More

- Learn more about how to implement object tracking for computer vision.

- A guide on YOLOv8 for object tracking.

- Learn how to track and count objects using YOLOv8.

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Jul 17, 2024). Top 7 Open Source Object Tracking Tools [2025]. Roboflow Blog: https://blog.roboflow.com/top-object-tracking-software/