Introduction

Recent breakthroughs in large language models (LLMs) and foundation computer vision models have unlocked new interfaces and methods for editing images or videos. You may have heard of inpainting, outpainting, generative fill, and text to image; this post will show you how to execute those new generative AI functions by building your own visual editor using only text prompts and the newest open source models.

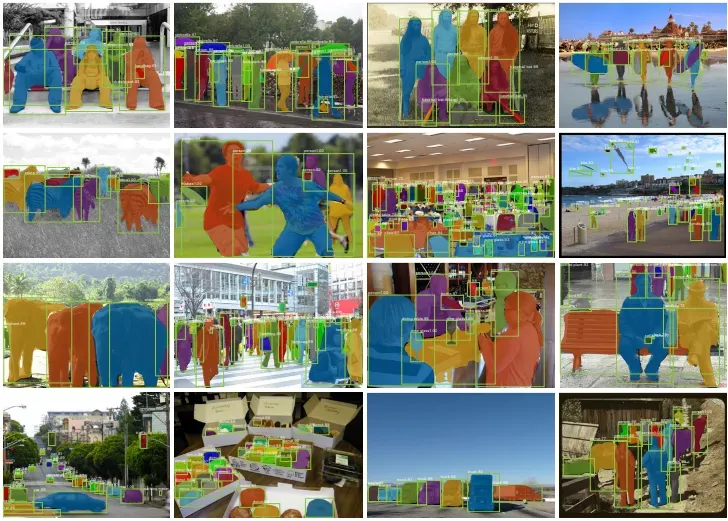

Image editing is no longer about manual manipulation using hosted software. Models like Segment Anything Model (SAM), Stable Diffusion, and Grounding DINO have made it possible to perform image editing using only text commands. Together, they create a powerful workflow that seamlessly combines image zero shot detection, segmentation, and inpainting. The goal of the tutorial is to demonstrate the potential of the three powerful models to get you started so you can build on top of it.

By the end of this guide, you'll be able to transform and manipulate images using nothing more than text commands. This blog post will carefully walk you through a tutorial on how to leverage these models for image editing!

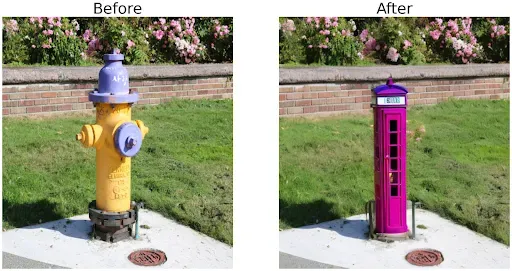

Changing Objects Entirely

Changing colors and texture of objects

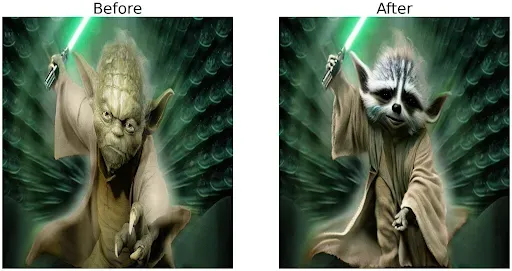

Creative Applications with Context

#Step 1: Install Dependencies

Our process starts by installing the necessary libraries and models. We begin with SAM, a powerful segmentation model, Stable Diffusion for image inpainting, and GroundingDINO for zero shot object detection.

!pip -q install diffusers transformers scipy segment_anything

!git clone https://github.com/IDEA-Research/GroundingDINO.git

%cd GroundingDINO

!pip -q install -e .#Step 2: Detect, Predict, Extract Masks

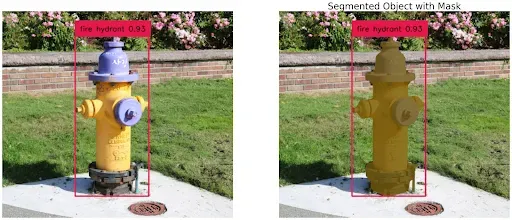

We'll use Grounding DINO for zero shot object detection based on the text input, in this case “fire hydrant”. Using the predict function from GroundingDINO, we obtain the boxes, logits, and phrases for our image. We then annotate our image using these results.

from groundingdino.util.inference import load_model, load_image, predict, annotate

TEXT_PROMPT = "fire hydrant"

boxes, logits, phrases = predict(

model=groundingdino_model,

image=img,

caption=TEXT_PROMPT,

box_threshold=BOX_TRESHOLD,

text_threshold=TEXT_TRESHOLD

)

img_annnotated = annotate(image_source=src, boxes=boxes, logits=logits, phrases=phrases)[...,::-1]

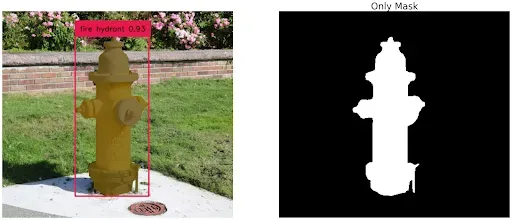

Extract Masks from Bounding Box using SAM

Then, we will use SAM to extract masks from the bounding box.

from segment_anything import SamPredictor, sam_model_registry

predictor = SamPredictor(sam_model_registry[model_type](checkpoint="./weights/sam_vit_h_4b8939.pth").to(device=device))

masks, _, _ = predictor.predict_torch(

point_coords = None,

point_labels = None,

boxes = new_boxes,

multimask_output = False,

)

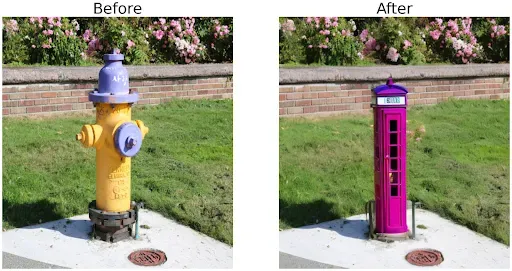

#Step 3: Modify Image Using Stable Diffusion

Then, we will modify the image based on a text prompt using Stable Diffusion. The pipe function from Stable Diffusion is used to inpaint the areas identified by the mask with the contents of the text prompt. Keep this in mind for your use cases, you’ll want the inpainted objects to be a similar form and shape to the object they are replacing.

prompt = "Phone Booth"

edited = pipe(prompt=prompt, image=original_img, mask_image=only_mask).images[0]

Use Cases for Editing Images with Text Prompts

- Rapid Prototyping: Accelerate product development and testing with quick visualization enabling faster feedback and decision making for designers and developers.

- Image Translation and Localization: Support diversity by translating and localizing visual content with alternatives.

- Video/Image Editing and Content Management: Speed up editing images and videos using text prompts instead of UI, catering to individual creators and enterprises for mass editing tasks.

- Object Identification and Replacement: Easily identify objects and replace them with other objects, such as replacing a beer bottle with a coke bottle.

Conclusion

That’s it! Leveraging powerful models such as SAM, Stable Diffusion, and Grounding DINO makes image transformations easier and more accessible. With text-based commands, we can instruct the models to execute precise tasks such as recognizing objects, segmenting them, and replacing them with other objects.

The code in this tutorial provides a starting point for getting started with text-based image editing, and we encourage you to experiment with different objects and see what fascinating results you can achieve.

You might also be interested in using the newer Segment Anything 3 - a zero-shot image segmentation model that detects, segments, and tracks objects in images and videos based on concept prompts.

Full Code

For complete implementation details, refer to the full Colab notebook.

def process_boxes(boxes, src):

H, W, _ = src.shape

boxes_xyxy = box_ops.box_cxcywh_to_xyxy(boxes) * torch.Tensor([W, H, W, H])

return predictor.transform.apply_boxes_torch(boxes_xyxy, src.shape[:2]).to(device)

def edit_image(path, item, prompt, box_threshold, text_threshold):

src, img = load_image(path)

boxes, logits, phrases = predict(

model=groundingdino_model,

image=img,

caption=item,

box_threshold=box_threshold,

text_threshold=text_threshold

)

predictor.set_image(src)

new_boxes = process_boxes(boxes, src)

masks, _, _ = predictor.predict_torch(

point_coords=None,

point_labels=None,

boxes=new_boxes,

multimask_output=False,

)

img_annotated_mask = show_mask(masks[0][0].cpu(),

annotate(image_source=src, boxes=boxes, logits=logits, phrases=phrases)[...,::-1]

)

return pipe(prompt=prompt,

image=Image.fromarray(src).resize((512, 512)),

mask_image=Image.fromarray(masks[0][0].cpu().numpy()).resize((512, 512))

).images[0]

Cite this Post

Use the following entry to cite this post in your research:

Arty Ariuntuya. (Aug 1, 2023). Using Stable Diffusion and SAM to Modify Image Contents Zero Shot. Roboflow Blog: https://blog.roboflow.com/stable-diffusion-sam-image-edits/