If you're searching for a dataset to use or are looking to improve your data science modeling skills, Kaggle is a great resource for free data and for competitions. For example, there's currently an open Kaggle competition on detecting helmet impacts (crashes) in American football, in which you can compete through early 2021. We want to show you how to combine your own model with Roboflow to hopefully take your model performance to new heights!

There are a few resources we hope will be helpful in this case study.

- The blog post you're currently reading. Here, we'll walk through how we tackled this problem.

- The Google Colab notebook we used to fit our Scaled-YOLOv4 model. (We chose this model for its speed, but you can choose whatever object detection model you want.)

- The Roboflow app to easily preprocess and augment your images.

Overview of Problem

While helmets are designed to protect players' heads and necks, there is a lot of concern surrounding football players experiencing traumatic brain injuries (TBI) and/or developing chronic traumatic encephalopathy (CTE). CTE is a disease that may cause, among other symptoms, problems with judgment, reasoning, problem solving, impulse control, and aggression, according to Boston University. Estimates of the percentage of NFL players with signs of CTE are well above 90%, with three studies from 2016 and 2017 estimating 95%, 97%, and 99%.

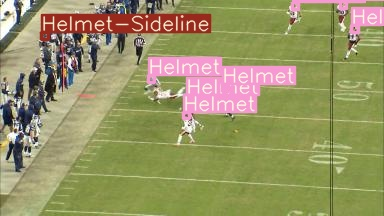

The purpose of this Kaggle competition is to detect helmets on the field and to detect when two helmets connect. By better detecting when two helmets crash into each other, we can start to understand what factors make these collisions likelier and whether changes can be made – for example, to NFL rules – that may decrease the rate at which TBIs or CTE occur.

Preparing the Data

Kaggle provided 120 videos for training. A video is just a sequence of pictures, so it is possible to split each video into its individual frames. Kaggle has done this for us, so we'll work with the images directly. (If you ever encounter a situation where you have a video and aren't given the images, Roboflow can turn video into images for you!)

In many image problems, you may need to annotate your images. Kaggle has also done this for us! (Roboflow recently launched its labeling feature; you can annotate your images right in the app.)

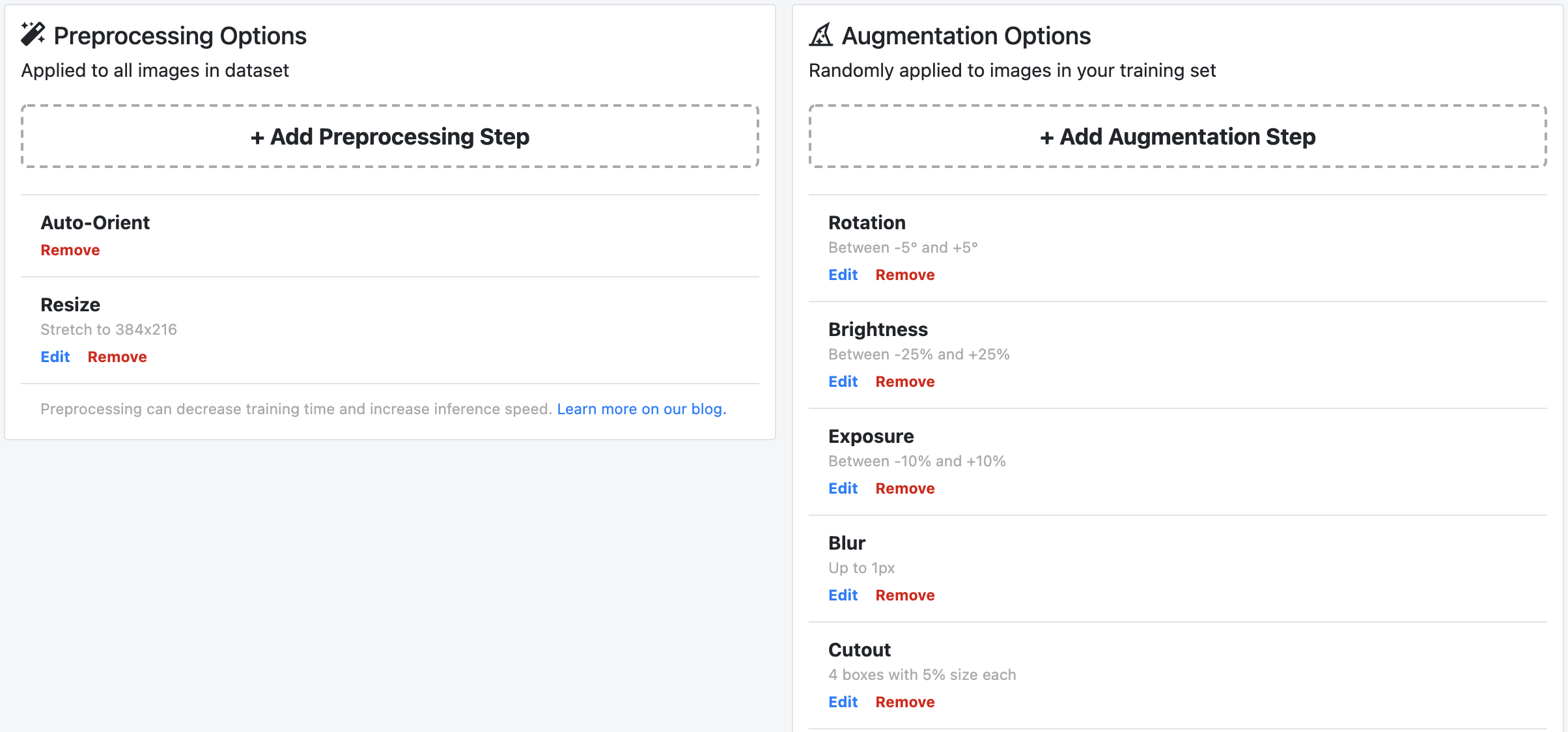

We uploaded the images to Roboflow to preprocess and augment them.

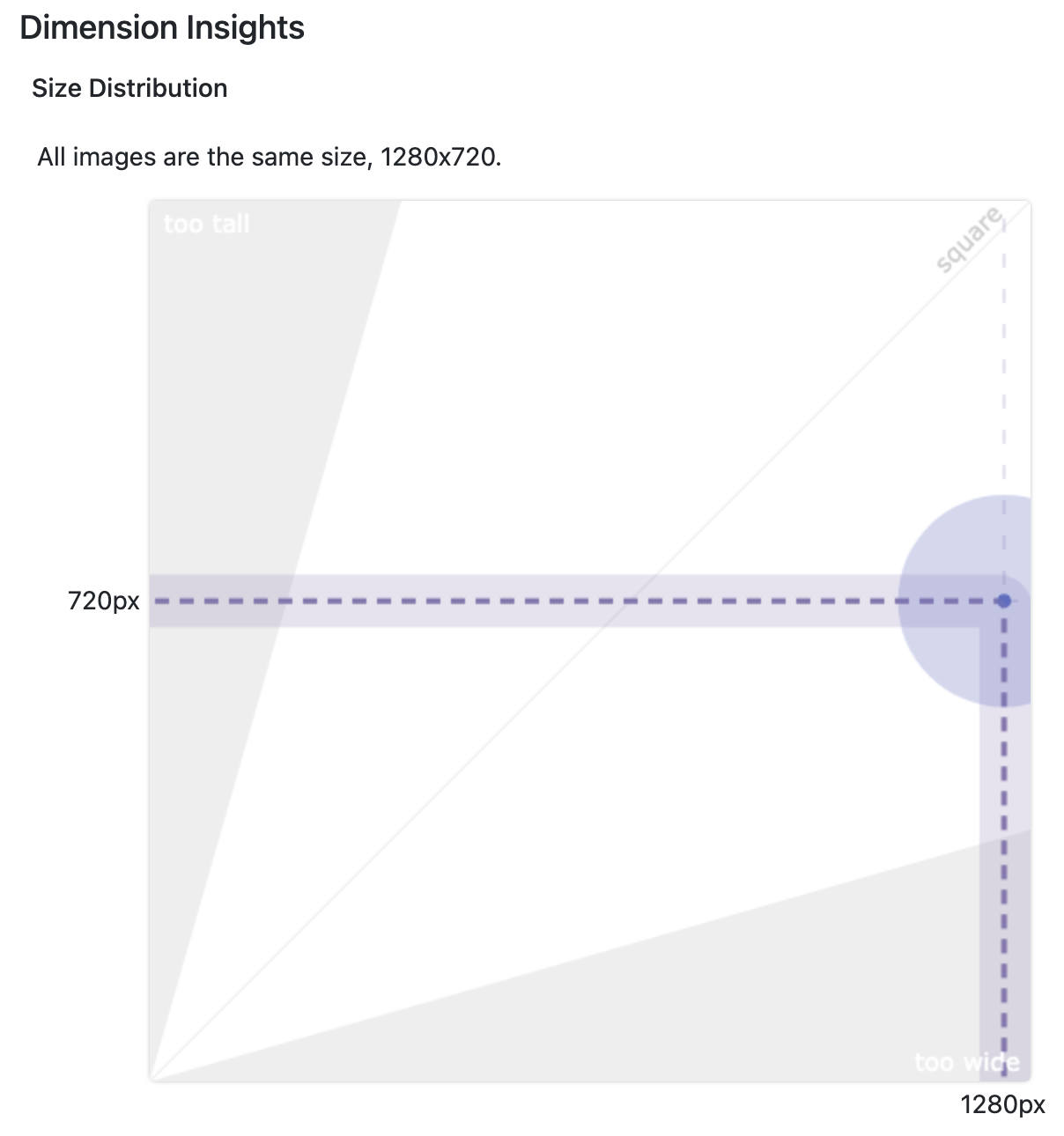

- Image Preprocessing: These are steps that you want to apply to all of your images, such as converting them to greyscale or resizing them. In the Kaggle data, our images were very large: 1280 x 720 pixels. It's good to have images that are of high resolution (blurry photos aren't very useful), but that high resolution can require significantly more time to train your model. If you think about each pixel as an input to your model, there are over 920,000 inputs to your model! We used Roboflow to resize our images to be smaller (384 x 216) while keeping the same aspect ratio.

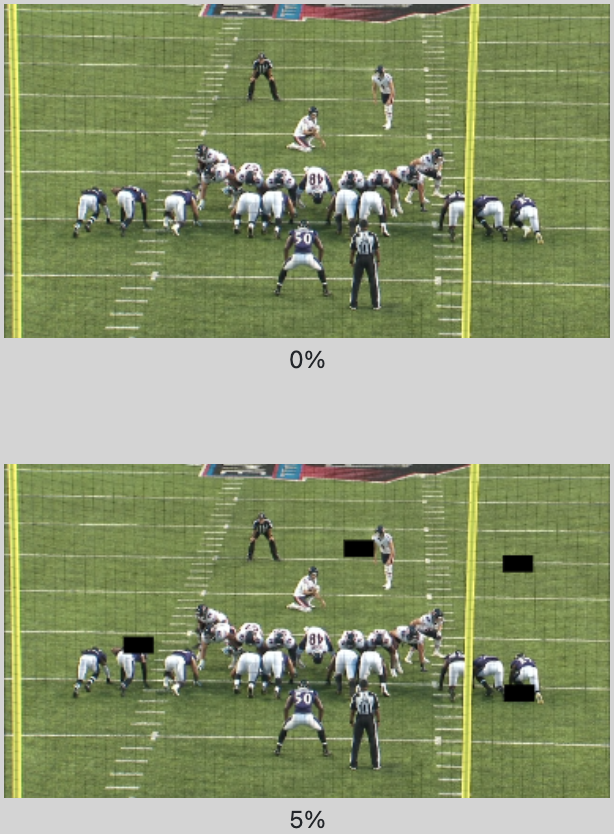
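As a quick check on the resize numbers above, here is a minimal Python sketch. The two image sizes come from this post. The `scale_box` helper and its `(left, top, width, height)` box format are hypothetical and only illustrate that annotations have to shrink along with the image. Roboflow handles this for you, and its internal approach may differ.

```python
from fractions import Fraction

ORIGINAL = (1280, 720)  # width, height of the Kaggle frames (from the post)
RESIZED = (384, 216)    # width, height after resizing in Roboflow (from the post)


def pixel_count(size):
    """Number of pixels in a (width, height) image, ignoring color channels."""
    width, height = size
    return width * height


def aspect_ratio(size):
    """Exact width:height ratio as a reduced fraction."""
    width, height = size
    return Fraction(width, height)


def scale_box(box, src=ORIGINAL, dst=RESIZED):
    """Rescale a hypothetical (left, top, width, height) box from src to dst."""
    sx = Fraction(dst[0], src[0])  # horizontal scale factor
    sy = Fraction(dst[1], src[1])  # vertical scale factor
    left, top, width, height = box
    return (float(left * sx), float(top * sy), float(width * sx), float(height * sy))


print(pixel_count(ORIGINAL))                            # 921600
print(pixel_count(RESIZED))                             # 82944
print(aspect_ratio(ORIGINAL) == aspect_ratio(RESIZED))  # True (both are 16:9)
print(scale_box((100, 200, 20, 30)))                    # (30.0, 60.0, 6.0, 9.0)
```

The resized image has roughly 11 times fewer pixels than the original, which is where the training-time savings come from.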

- Image Augmentation: These are steps you want to apply to only your training images so that your model can better generalize to unseen images. To the Kaggle data, we added random rotation, brightness, exposure, blur, and cutout to our images. Each of these techniques is designed to simulate real-world differences we might see in our images. Take cutout as an example. Our goal is to detect helmets and collisions. Two helmets may collide, but the collision could take place behind another player and thus out of the camera's view. The cutout technique randomly places black boxes around the image to reflect natural blockages that may be in the image, like a collision that isn't viewable by the camera. In the bottom image, we see a copy of the top image but with a small amount of cutout applied to the image.
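To make the cutout idea concrete, here is a minimal sketch in plain Python. It is not Roboflow's implementation. The function name `apply_cutout`, its parameters, and the list-of-lists image format are all assumptions chosen for illustration.

```python
import random


def apply_cutout(image, num_boxes=3, box_fraction=0.1, seed=None):
    """Return a copy of `image` with `num_boxes` black rectangles drawn on it.

    `image` is a list of rows, each row a list of pixel intensities (0-255).
    Each box spans `box_fraction` of the image height and width.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    box_h = max(1, int(height * box_fraction))
    box_w = max(1, int(width * box_fraction))
    result = [row[:] for row in image]  # copy so the original is untouched
    for _ in range(num_boxes):
        # Pick a random top-left corner that keeps the box inside the image.
        top = rng.randint(0, height - box_h)
        left = rng.randint(0, width - box_w)
        for y in range(top, top + box_h):
            for x in range(left, left + box_w):
                result[y][x] = 0  # black pixel
    return result


# A tiny all-white "image" stands in for a real 384 x 216 frame.
frame = [[255] * 40 for _ in range(20)]
augmented = apply_cutout(frame, num_boxes=2, box_fraction=0.25, seed=0)
blacked_out = sum(row.count(0) for row in augmented)
print(blacked_out)  # at most 100 pixels (2 boxes of 5 x 10); fewer if they overlap
```

The helmet annotations themselves are left unchanged by cutout. The model simply has to learn to find helmets that are partly hidden.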

Modeling

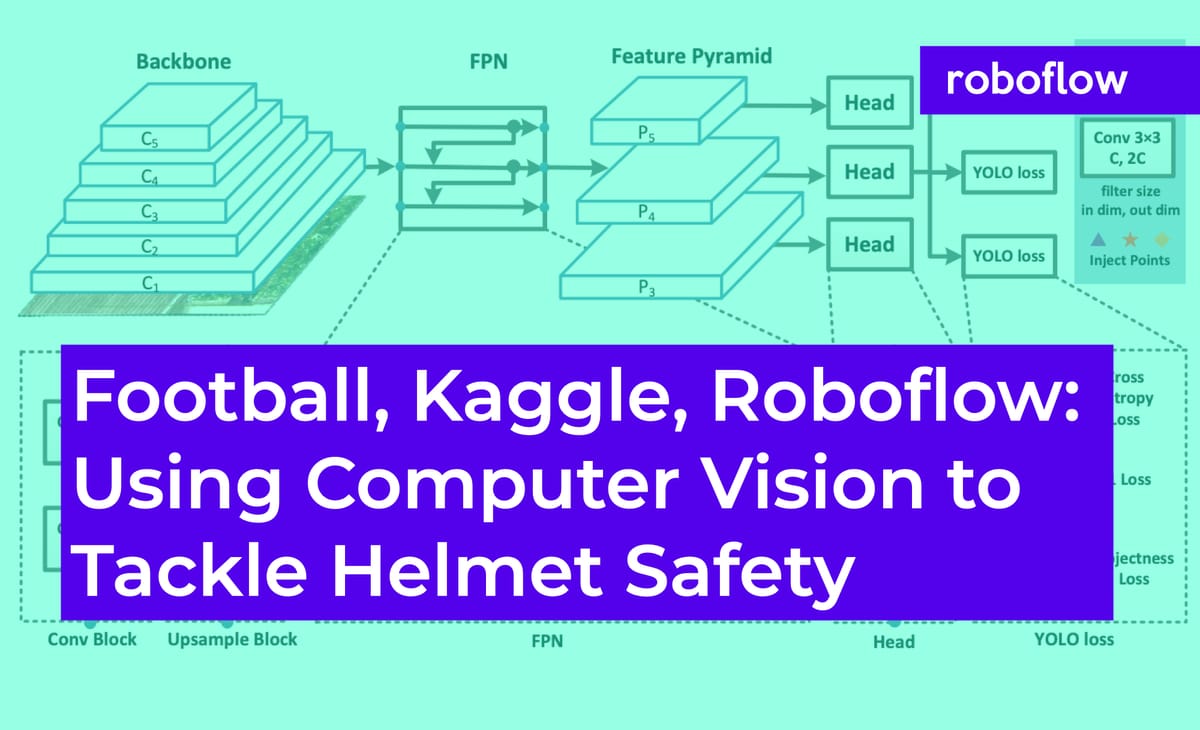

There are, of course, many different models out there. As of this writing (December 2020), the state-of-the-art object detection model is Scaled-YOLOv4: based on published benchmarks, its combination of speed and accuracy is ahead of other models. Thus, we fit a Scaled-YOLOv4 model to our data. You can follow our Google Colaboratory notebook here.

Note that you will need to make a copy of the notebook and you will need to get your own data with your own API key from Roboflow. This Scaled-YOLOv4 video tutorial will cover that in detail!

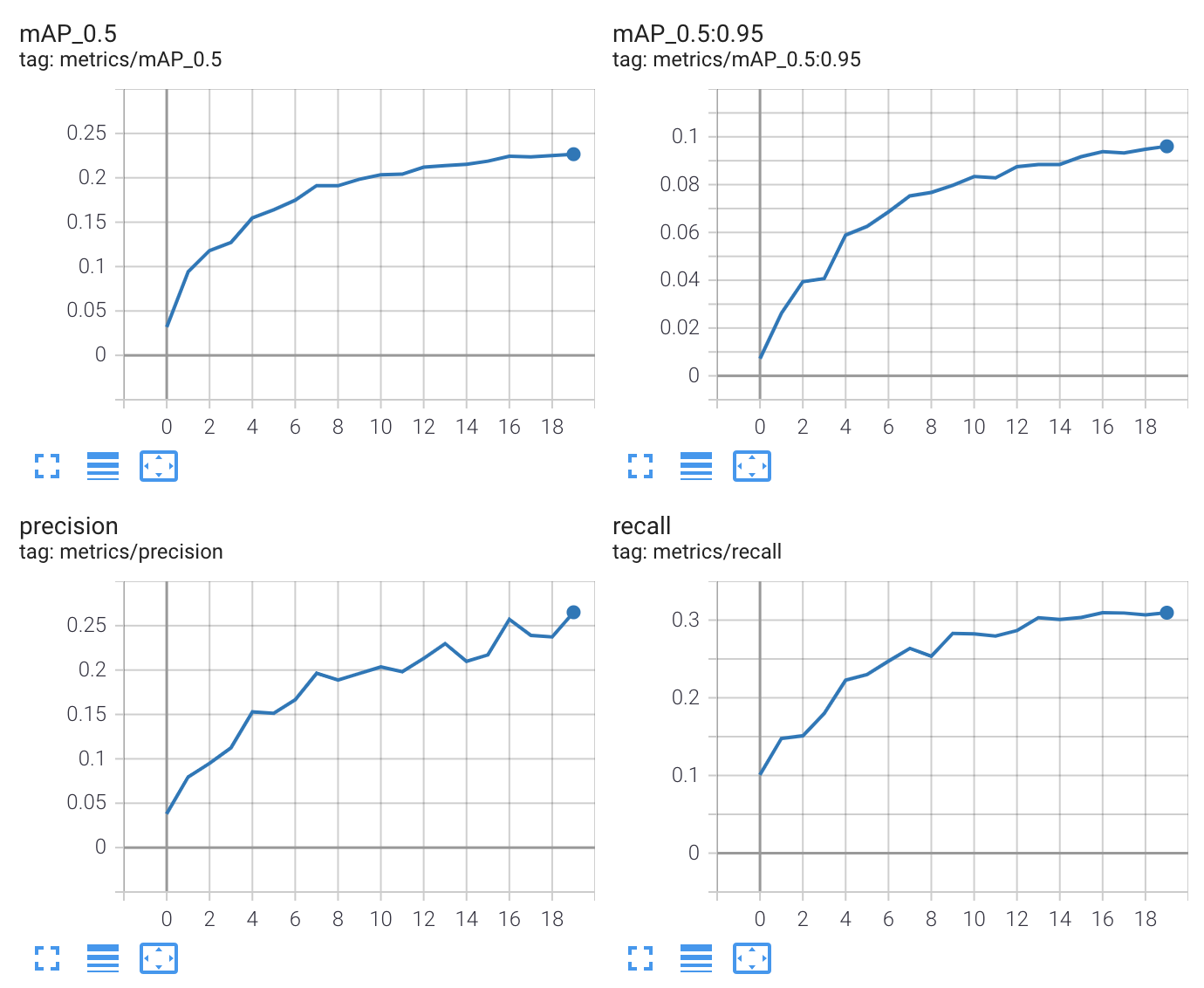

We ran our model for 20 epochs. It's not uncommon to see the number of epochs be well above 1,000 for object detection tasks, so there's a lot of improvement that can be made simply by increasing the training time. However, this also takes a significant amount of time – 20 epochs took approximately 2.5 hours on our Colab notebook with GPU, so attempting 1,000 on the same setup would have taken approximately five full days. The plots below align with the belief that more epochs would yield improved performance, as the metrics are continuing to improve as the epochs increase. (This is mirrored in both training and validation performance.)
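The five-day estimate above is a simple linear extrapolation, sketched below. It assumes every epoch takes the same amount of time on the same hardware, which will not hold exactly in practice (Colab sessions can also time out). The function name is ours.

```python
EPOCHS_RUN = 20      # epochs we actually trained (from the post)
HOURS_FOR_RUN = 2.5  # approximate wall-clock time for those epochs (from the post)


def estimated_hours(target_epochs, epochs_run=EPOCHS_RUN, hours=HOURS_FOR_RUN):
    """Linear extrapolation: assumes each epoch takes the same time."""
    return target_epochs * hours / epochs_run


hours = estimated_hours(1000)
print(hours)                 # 125.0
print(round(hours / 24, 1))  # 5.2 (days)
```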

Once we built our model, we generated predictions (often referred to as "inference" in computer vision) on the test set. Generating predictions on 995 images took 25.4 seconds, meaning that inference runs at roughly 40 frames per second (FPS). That's short of real time for this footage, since the original data was shared at about 60 FPS, but 40 FPS should be more than sufficient for the television replays that we see in American football games.
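The throughput figure follows directly from the two measurements above. Here is a short sketch of the arithmetic. The helper name is ours, and the 60 FPS source rate is the approximate figure quoted in this post.

```python
def frames_per_second(num_images, seconds):
    """Average inference throughput over a batch of images."""
    return num_images / seconds


fps = frames_per_second(995, 25.4)
print(round(fps, 1))         # 39.2 frames per second
print(round(1000 / fps, 1))  # 25.5 milliseconds per image
print(round(fps / 60, 2))    # 0.65, the fraction of a ~60 FPS source rate
```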

Conclusion

For the NFL and helmet detection, reducing TBI and CTE is certainly a noble goal. It may be surprising that computer vision can be used to better understand this issue, but computer vision has a ton of applications; it's not all self-driving cars and white blood cell detection. There are currently over 400 teams tackling this specific NFL problem, with many more teams tackling other problems.

More broadly, Kaggle is a good place to flex your data skills. Right now (December 2020), there are plenty of competitions that allow you to incorporate external data (like this one on detecting improperly placed catheters and chest tubes in X-rays), so if you want to use and upload any data that you've augmented and pre-processed in Roboflow, take full advantage of that! If you do, be sure to let us know – we love hearing how Roboflow is being utilized and want to help you show off your work.

Cite this Post

Use the following entry to cite this post in your research:

Matt Brems. (Dec 28, 2020). Football, Kaggle, Roboflow: Using Computer Vision to Tackle Helmet Safety. Roboflow Blog: https://blog.roboflow.com/football-kaggle-computer-vision-safety-scaled-yolov4/