Today, we are introducing new tools powered by Segment Anything 3 (SAM 3) that significantly change how people build computer vision applications.

SAM 3 is a powerful vision foundation model that detects, segments, and tracks objects in images and videos based on prompts. See our detailed SAM 3 model overview to learn more about what the model can do.

In partnership with Meta, we have integrated SAM 3 into every part of the Roboflow ecosystem. You can now prompt the model with text to rapidly create production-ready custom vision applications.

We believe the speed at which you can now create vision applications or tune your own models marks a major step forward for visual artificial intelligence. If you've tried building a vision application in the past and failed, now is the time to try again. It truly is a new day for vision AI.

Let’s explore the latest releases.

The fastest way to start using SAM 3

SAM 3 is highly capable out of the box: with a simple prompt, it can accurately detect and segment a huge range of objects. We have integrated SAM 3 into Roboflow's infrastructure for running vision applications in the cloud or on edge hardware, so you can add it to your applications immediately.

Test and compare SAM 3 against other models

With Roboflow Playground, you can test SAM 3 on images for free, no login required. The playground allows you to explore how foundation models handle visuals, prompts, and tasks.

SAM 3 is available to compare against other models, including Gemini, Claude, Grok, and Qwen. We recommend testing all models before starting your application, as model performance can vary widely depending on your data and use case.

Use the SAM 3 API endpoint

SAM 3 is compute intensive, so Roboflow offers a dedicated SAM 3 endpoint backed by our scalable cloud infrastructure. You can run SAM 3 at scale without building and managing that infrastructure yourself.

Deploy SAM 3 locally or in your private cloud

SAM 3 is an open model and available for local deployments. Roboflow Inference makes it easy to use SAM 3 locally or in your private cloud. Inference efficiently processes video streams, optimizes resources, and manages dependencies for you. You can add logic, transform data, send triggers, chain other foundation models, and develop a custom pipeline with SAM 3.

Build vision applications with SAM 3

When prompted, SAM 3 outputs segmentation masks for the objects it finds. To turn those masks into a working application, Roboflow Workflows offers over 100 pre-built functions that let you prototype, test, integrate, and deploy pipelines for complex vision problems to production.

You can combine custom models, open source models, LLM APIs, pre-built logic, and external applications. You will receive the final result from your Workflow in the format you want, such as JSON.
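Workflows is configured in Roboflow's own builder, so the plain-Python sketch below only imitates the idea of chaining pre-built logic after a segmentation step and returning JSON. It assumes a hypothetical input format (a dict mapping each text prompt to a list of binary masks, stored as nested lists of 0s and 1s); the function name `summarize_detections` and the `min_area_px` parameter are illustrative, not part of any Roboflow API.

```python
import json
from typing import Dict, List

# Hypothetical mask format: mask[y][x] is 1 for object pixels, 0 for background.
Mask = List[List[int]]


def summarize_detections(masks_by_prompt: Dict[str, List[Mask]], min_area_px: int = 1) -> str:
    """Filter out tiny masks, then report a count and pixel areas per prompt as JSON."""
    summary = {}
    for prompt, masks in masks_by_prompt.items():
        # The pixel area of a binary mask is the number of 1s it contains.
        areas = [sum(sum(row) for row in mask) for mask in masks]
        # Drop masks below the area threshold (e.g. specks or noise).
        kept = [area for area in areas if area >= min_area_px]
        summary[prompt] = {"count": len(kept), "areas_px": kept}
    return json.dumps(summary, sort_keys=True)
```

For example, two masks returned for the prompt `"box"` with areas of 2 and 1 pixels, filtered with `min_area_px=2`, produce `{"box": {"areas_px": [2], "count": 1}}`, a payload you could forward to an external application.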

Create a custom SAM 3 endpoint with a few images

SAM 3 is ideal for building a fast proof of concept for a custom object detection or instance segmentation model. With just a handful of images, you can have a SAM 3 endpoint tailored to your specific objects, so you can start building your vision application immediately instead of labeling data or waiting for a custom model to train.

Create a custom SAM 3 endpoint with Roboflow Rapid.

Accelerate model development with SAM 3

While SAM 3 is highly capable out of the box, there are times when you may want to tune the model to perform better in your specific situation or use SAM 3 as a tool to label data for training other models.

Fine-tune SAM 3 with custom data

If you want SAM 3 to recognize certain objects more reliably, you can create or upload a custom dataset and fine-tune the model for your use case. This tailors SAM 3’s general understanding of objects to the specific problem you are solving. Fine-tuning matters most when the model’s out-of-the-box accuracy is not yet good enough for production.

Read our guide to fine-tuning SAM 3.

Use SAM 3 to automatically label image data

Because SAM 3 can identify objects from text prompts, you can use it to automatically label a dataset for training other model architectures. Labeling a dataset with SAM 3 transfers its knowledge to models better suited to your environment, which is common when your use case requires faster inference or must run on limited compute.
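Many detection architectures train on bounding boxes rather than masks, so auto-labeling often ends with a mask-to-box conversion. The sketch below assumes each SAM 3 mask has already been converted to a binary 2D list (a hypothetical format; real outputs may arrive as arrays, polygons, or run-length encodings) and writes one YOLO-style detection label per mask: `class x_center y_center width height`, normalized to the image size. The helper names are illustrative.

```python
from typing import List, Optional, Tuple

# Hypothetical mask format: mask[y][x] is 1 for object pixels, 0 for background.
Mask = List[List[int]]


def mask_to_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return the tight pixel box (x_min, y_min, x_max, y_max), or None for an empty mask."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None
    xs = [x for row in mask for x, value in enumerate(row) if value]
    return min(xs), min(ys), max(xs), max(ys)


def mask_to_yolo_line(mask: Mask, class_id: int) -> Optional[str]:
    """Convert one mask into a normalized YOLO detection label line."""
    box = mask_to_bbox(mask)
    if box is None:
        return None
    height, width = len(mask), len(mask[0])
    x_min, y_min, x_max, y_max = box
    # Pixel indices are inclusive, so a box spanning columns 2..3 is 2 pixels wide.
    box_w = (x_max - x_min + 1) / width
    box_h = (y_max - y_min + 1) / height
    center_x = (x_min + x_max + 1) / 2 / width
    center_y = (y_min + y_max + 1) / 2 / height
    return f"{class_id} {center_x:.6f} {center_y:.6f} {box_w:.6f} {box_h:.6f}"
```

For a 4×4 mask whose object covers rows 1–2 and columns 2–3, `mask_to_yolo_line(mask, 0)` returns `"0 0.750000 0.500000 0.500000 0.500000"`. Empty masks return `None` so they can be skipped rather than written as degenerate boxes.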

Automatically label data with SAM 3.

Speed up manual data labeling with SAM 3

Even when text prompts don't identify your objects reliably, you can use SAM 3's general understanding of object shapes to label unique datasets. In this mode, you click points on an image to guide SAM 3, and it generates a mask around the object you selected. This speeds up labeling, because SAM 3 often produces a complete mask from a single click.

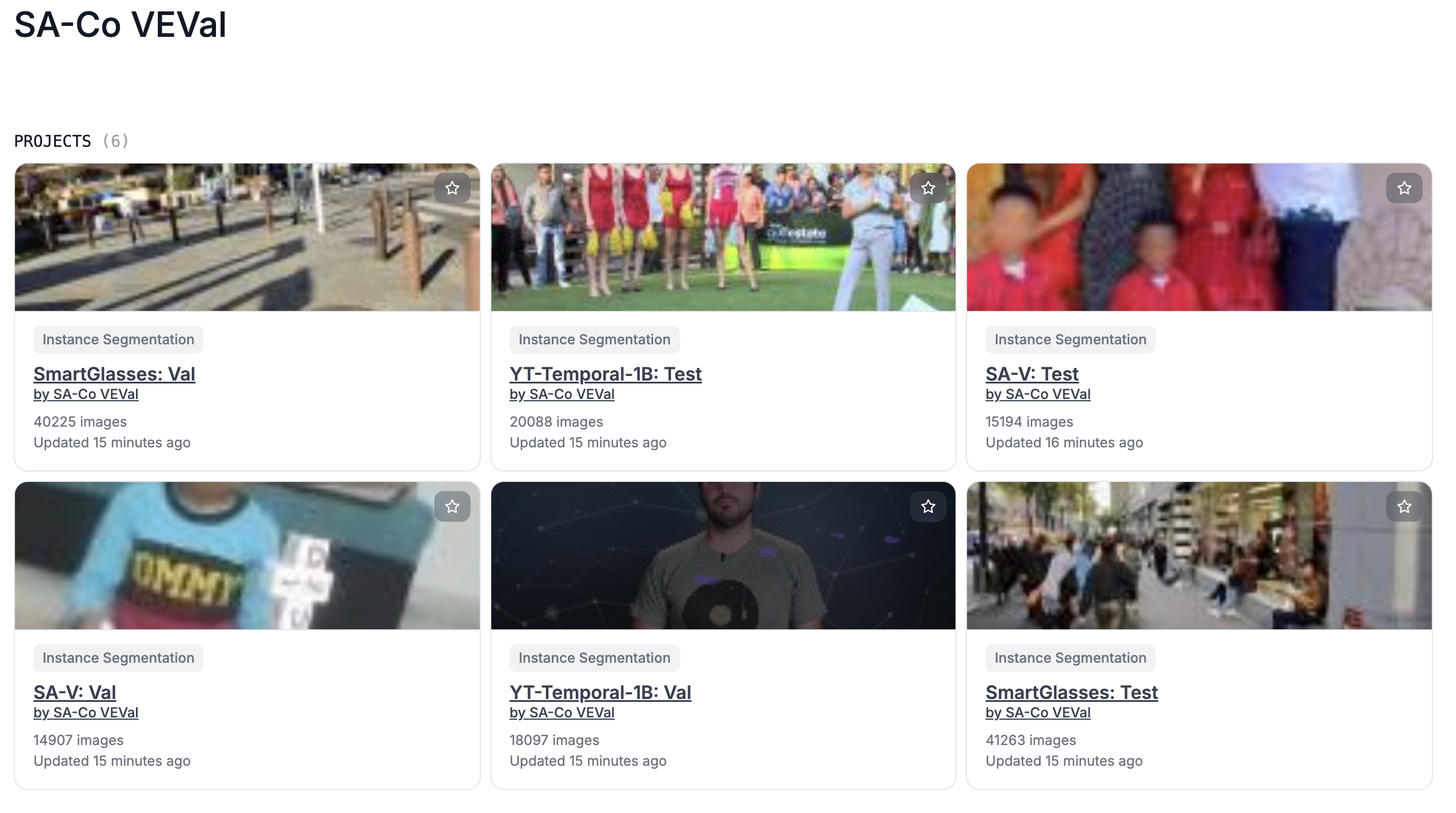

SA-Co, a new vision benchmark

Along with SAM 3, Meta created a new benchmark, the SA-Co evaluation benchmark, which you can explore on Roboflow Universe. This new benchmark has 214K unique phrases, 126K images and videos, and over 3M media-phrase pairs with challenging hard negative labels to test open-vocabulary recognition.

The benchmark consists of several splits. SA-Co/Gold covers seven domains, and each image-noun phrase (NP) pair is annotated by three different annotators. SA-Co/Silver covers ten domains, with only one human annotation per image-NP pair. SA-Co/Bronze and SA-Co/Bio comprise nine existing datasets that either come with mask annotations or use masks generated by prompting SAM 2 with boxes.
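To make the role of hard negatives concrete, here is a simplified, hypothetical scorer for a single media-phrase pair. It is not the official SA-Co metric: the binary-list mask format, the greedy matching, the 0.5 IoU threshold, and the function names are our own assumptions. The key idea it shows is that when a phrase has no ground-truth masks (a hard negative), any mask the model predicts counts as a false positive.

```python
from typing import Dict, List, Optional

# Hypothetical mask format: mask[y][x] is 1 for object pixels, 0 for background.
Mask = List[List[int]]


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two same-sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += pa & pb
            union += pa | pb
    # Treat two empty masks as non-overlapping so empty predictions never match.
    return inter / union if union else 0.0


def score_pair(pred_masks: List[Mask], gt_masks: List[Mask], iou_threshold: float = 0.5) -> Dict[str, int]:
    """Simplified TP/FP/FN counts for one media-phrase pair (not the official SA-Co metric)."""
    if not gt_masks:
        # Hard negative: the phrase is absent, so every predicted mask is a false positive.
        return {"tp": 0, "fp": len(pred_masks), "fn": 0}
    matched = set()
    tp = 0
    for pred in pred_masks:
        # Greedily match each prediction to the best still-unmatched ground-truth mask.
        best_j: Optional[int] = None
        best_iou = 0.0
        for j, gt in enumerate(gt_masks):
            if j in matched:
                continue
            iou = mask_iou(pred, gt)
            if iou > best_iou:
                best_j, best_iou = j, iou
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            tp += 1
    return {"tp": tp, "fp": len(pred_masks) - tp, "fn": len(gt_masks) - tp}
```

A model that "hallucinates" an object for an absent phrase is penalized even though there is nothing to overlap with, which is exactly the failure mode hard negatives are designed to expose.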

Text in, visual intelligence out.

The era of hard-to-build vision apps is over. Every step in the vision process can be accelerated with AI. SAM 3 recognizes an immense range of visual concepts, and Roboflow’s tools help you put Meta’s latest foundation model to work.

Access SAM 3 with Roboflow today.

Cite this Post

Use the following entry to cite this post in your research:

Trevor Lynn. (Nov 19, 2025). Launch: Use Segment Anything 3 (SAM 3) with Roboflow. Roboflow Blog: https://blog.roboflow.com/sam3/