This post is a guest post written by Brian Egge. Brian works in finance, though this is a personal project.

Many households are getting more packages delivered than ever before. In my neighborhood the delivery drivers don’t ring the doorbell or announce they’ve dropped a package off. Many people have concerns of so-called “porch pirates,” but my concern is getting to the package before my dog opens it. There is at least one commercial service which will notify you of packages, but it requires a monthly subscription and uploading your video to the cloud. With a $100 Jetson Nano and a $100 IP Cam, I am able do this locally.

The following video dives deep into using the Jetson as an inference device:

I found it easier to train an AI model than to train my dog.

I started learning about computer vision after receiving a Jetson Nano a year ago. I began programming on the Apple II and feel there has never been a more exciting time to be a computer programmer.

Motivation

I knew I wanted to do this project when I first installed the camera above my door, so I started saving snapshots of packages after they were delivered. Once I had about 15 images I labeled them (first in Azure’s Custom Vision, which I later exported to Roboflow).

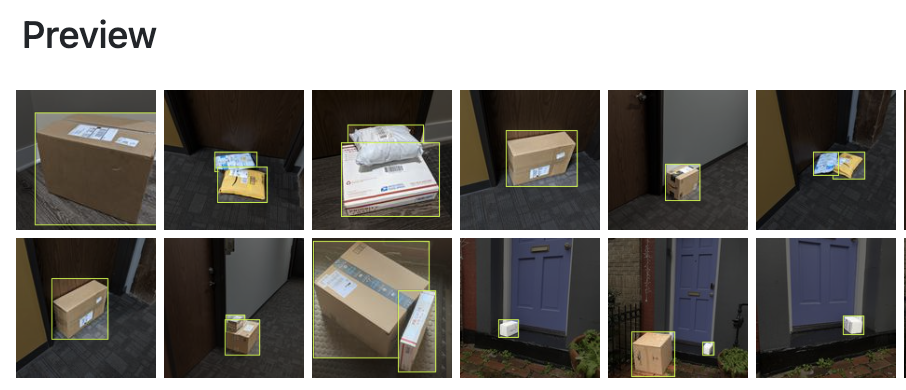

15 images might not seem like nearly enough, but it was enough for a proof-of-concept on which I could start iterating. (Side note: Roboflow has made available a package dataset and written about using public data to improve your models!)

When the system would not detect the package, I would save a snapshot and add it to the training set. I even did some manual data augmentation by moving a package around and taking multiple pictures of it. (Custom Vision did most of what I wanted, but limited me in that I could not adjust data augmentation in any way. Roboflow has image augmentation available -- image flip, rotation, and brightness seem to make the most sense in this case, and I’ve experimented with mosaics.)

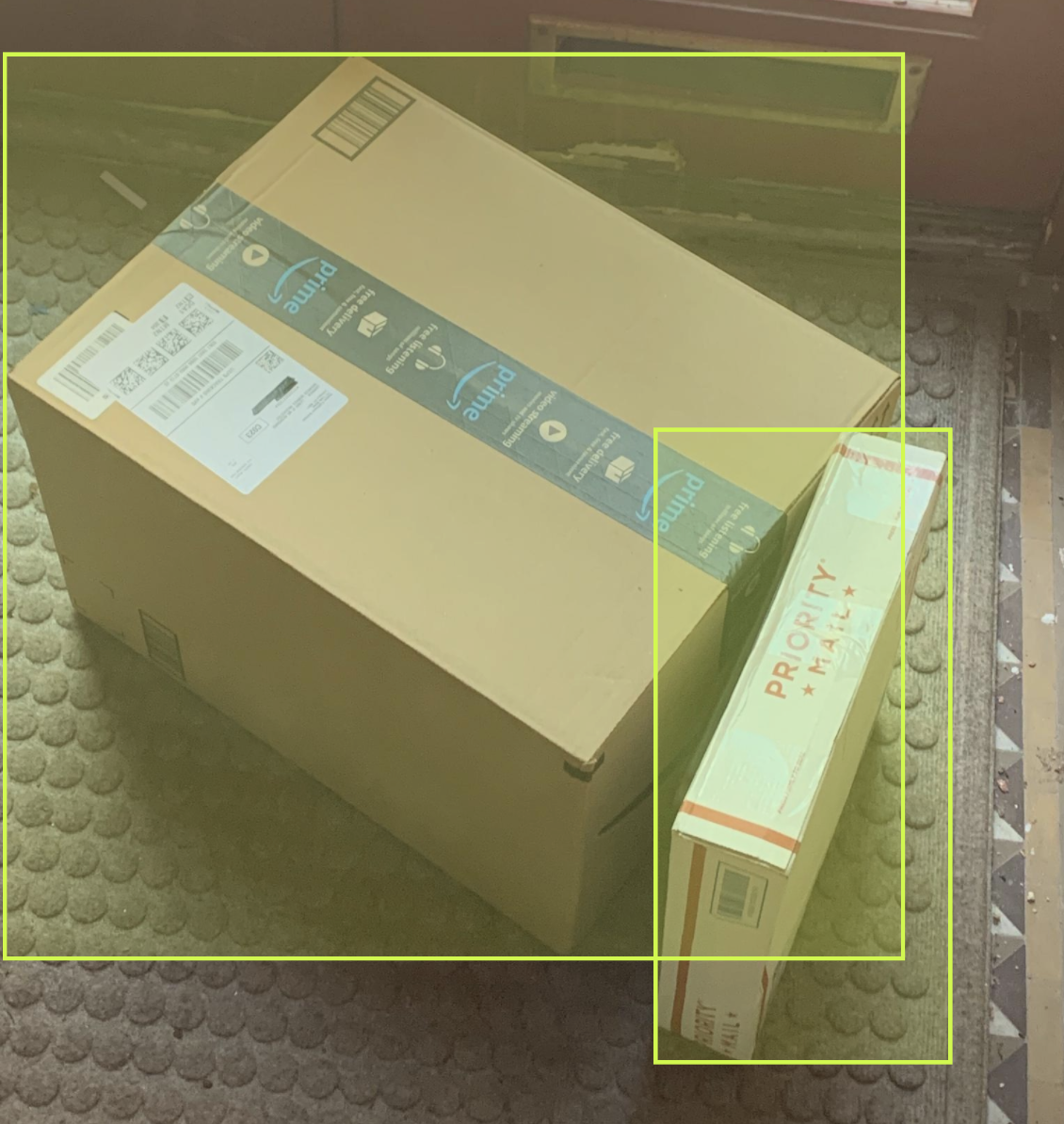

An image classifier (simply predicting package versus no package) might be easier to train than an object detector, but I like to have an image pushed to my phone with a zoomed in view of the package. The object detector bounding box makes this easier!

Modeling

My problem is not a difficult one to solve, but I want my number of false positives (incorrectly signaling that there are packages when there aren’t any) to be near zero. I also hope that the total accuracy is above 95%.

Fitting many object detection models to my dataset allowed me to experiment with what works best -- though that runs the risk of overfitting.

- I started with YOLOv2-tiny, which is a 416x416 model. When I run the detection, I change my model shape to be 1344x768.

- I’m now training YOLOv4-tiny and trying different model sizes.

Packages come in all different sizes, and the delivery people don’t always place them close to the door, so it’s important to be able to detect at multiple scales.

I was happy with my model being fit to packages on my porch, but it turns out my model indeed was overfit. When December came, I found that Christmas decorations and snow caused an increase in false positives -- that is, my model thought there were packages outside when there really weren’t.

Deployment

Deployment has been a huge challenge. It took me many hours to figure out how to convert it to ONNX and then to TensorRT. I was able to get it all working with the help of this repo. I’m currently polling the camera for a 4k image every four seconds. I could have the cameras ftp an image each time they detected motion, which would also work. For package detection, I’m happy to have slow processing time if it means higher accuracy.

Moving forward, I’d like to see if I can get a full sized model to run on the Jetson Nano, trading slower speed for higher accuracy. I’d also like to learn more about transfer learning. It seems I should be able to start training a small model and increase its size later in the training. I’d also like to learn how to resume training after adding images to the dataset -- something that Roboflow is currently working on!

Are you building something with Roboflow and you'd like to show off your work? Answer three quick questions here and we'll be in touch.

Cite this Post

Use the following entry to cite this post in your research:

Matt Brems. (Jan 19, 2021). Using Computer Vision to Detect Package Deliveries. Roboflow Blog: https://blog.roboflow.com/using-computer-vision-to-detect-package-deliveries/