Amitabha Banerjee used YOLOv5 and Roboflow to teach his Anki Vector robot to detect other robots. This is not only a fun project for teaching machine learning; it could also have real-world implications. In the future, we'll want robots to collaborate on tasks, and to do that, they will first need to be able to recognize each other!

He originally published a writeup of his work on Towards Artificial Intelligence and was kind enough to provide some additional information about his project and pipeline.

Background

The Anki Vector is a small, inexpensive, AI-powered, cloud-connected robot. According to Amitabha, it's "the cheapest fully functional autonomous robot that has ever been built."

He runs an online course that teaches AI with the help of the Vector robot. The robot already has some built-in AI (for example, it uses speech recognition to integrate with Amazon Alexa and can recognize humans via its onboard camera), but it does not come pre-programmed to recognize other robots. Amitabha wanted to see whether he could add that capability by training his own machine learning model.

The Process

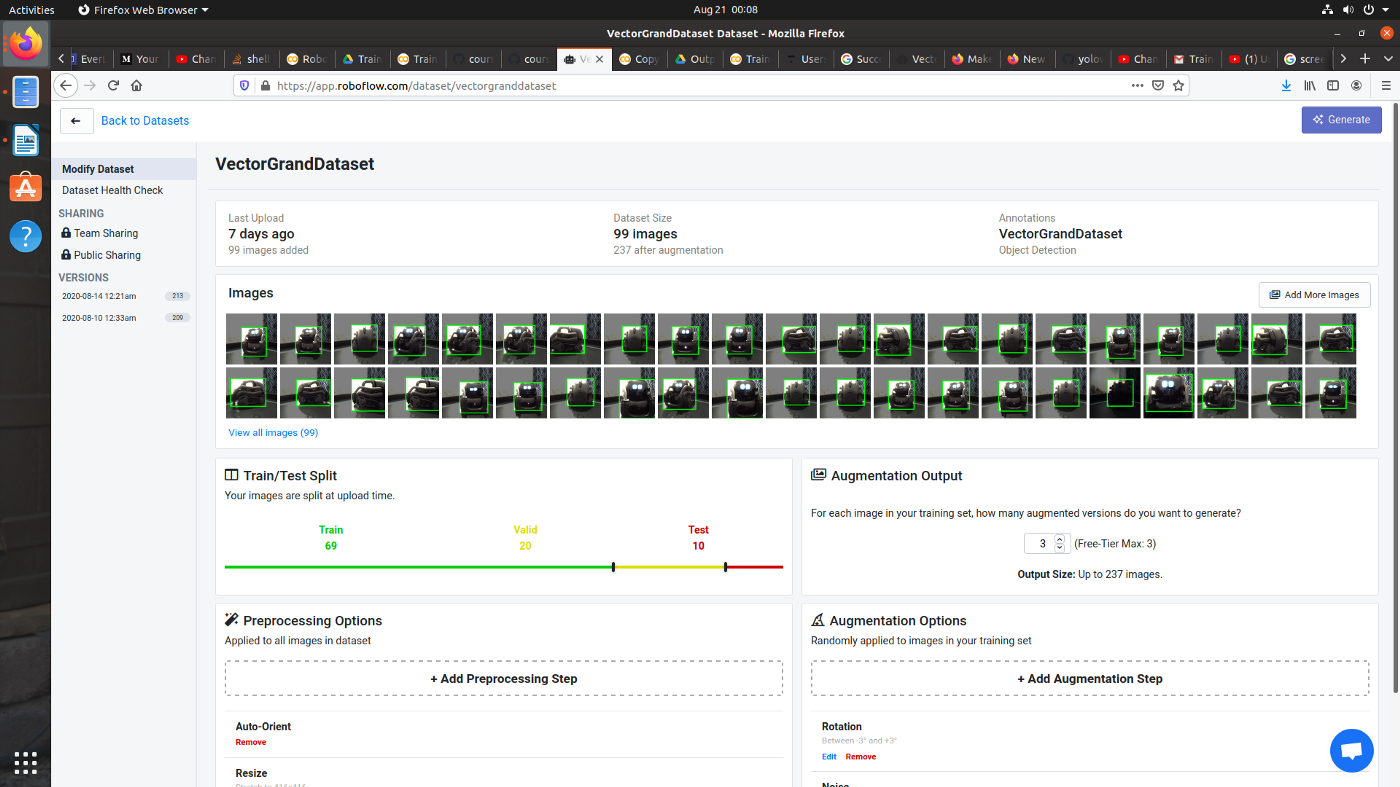

To realize this vision, Amitabha first collected training images of other robots using the Vector's onboard camera. He captured 303 images of robots in a variety of orientations, lighting conditions, and backgrounds, then used LabelImg to annotate them by drawing bounding boxes around the robots.
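LabelImg saves annotations as Pascal VOC XML by default, while YOLOv5 expects one text file per image with normalized center coordinates. The post doesn't say which format Amitabha exported, and Roboflow performs this conversion itself, so the following is only a minimal Python sketch of what the conversion involves. The single class name `robot` and the 640x360 sample annotation are hypothetical:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample annotation in the Pascal VOC layout LabelImg writes by default.
SAMPLE_XML = """<annotation>
  <filename>frame_0001.jpg</filename>
  <size><width>640</width><height>360</height><depth>3</depth></size>
  <object>
    <name>robot</name>
    <bndbox><xmin>200</xmin><ymin>120</ymin><xmax>360</xmax><ymax>280</ymax></bndbox>
  </object>
</annotation>"""

CLASSES = ["robot"]  # hypothetical class list; the index becomes the YOLO class id


def voc_to_yolo(xml_text, classes):
    """Convert one Pascal VOC annotation into YOLO label lines.

    Each line is: class_id x_center y_center width height,
    with all four box values normalized to [0, 1] by the image size.
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.find("size/width").text)
    img_h = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        class_id = classes.index(obj.find("name").text)
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(box.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        x_c = (xmin + xmax) / 2 / img_w
        y_c = (ymin + ymax) / 2 / img_h
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        lines.append(f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines


print(voc_to_yolo(SAMPLE_XML, CLASSES))  # ['0 0.437500 0.555556 0.250000 0.444444']
```

Normalizing by image size means the same label values stay valid when the image is later stretched to a new resolution.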

With his labeled dataset in hand, he uploaded it to Roboflow and created train, validation, and test splits. He then applied preprocessing steps to prepare the images for training, and augmentations to generate additional examples for his model to learn from.
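Roboflow creates the splits for you; the sketch below only illustrates the idea in Python. The 70/20/10 ratio, the fixed seed, and the `frame_XXXX.jpg` filenames are assumptions, not the settings Amitabha used:

```python
import random


def split_dataset(items, train_frac=0.7, valid_frac=0.2, seed=0):
    """Shuffle items reproducibly and split them into train/valid/test.

    The test split receives whatever remains after train and valid.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_valid = int(len(shuffled) * valid_frac)
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:]
    return train, valid, test


images = [f"frame_{i:04d}.jpg" for i in range(303)]  # hypothetical filenames
train, valid, test = split_dataset(images)
print(len(train), len(valid), len(test))  # 212 60 31
```

Splitting before augmenting matters: it keeps augmented copies of a validation or test image out of the training set, where they would otherwise inflate validation scores.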

The preprocessing steps were auto-orient, resize (416x416), auto-adjust contrast, and grayscale. The augmentations were rotate (±5°), blur (0.5px), noise (5%), and bounding-box-only brightness (±25%).
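Contrast adjustment, grayscale, blur, noise, and brightness only change pixel values, but the resize and rotate steps (and auto-orient, when an image carries EXIF orientation) move pixels, so every bounding box has to move with them. Roboflow handles this automatically. The minimal Python sketch below shows the geometry, assuming a plain stretch resize (Roboflow also offers fit-style resize modes that pad instead) and a rotation about the image center that keeps the canvas size. The sample box and 640x360 source size are hypothetical:

```python
import math


def resize_box(box, src_size, dst_size=(416, 416)):
    """Scale an (xmin, ymin, xmax, ymax) box for a stretch resize.

    A stretch resize scales x and y independently, so the aspect ratio is not preserved.
    """
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    xmin, ymin, xmax, ymax = box
    return (xmin * sx, ymin * sy, xmax * sx, ymax * sy)


def rotate_box(box, degrees, img_size):
    """Axis-aligned box enclosing `box` after rotating the image about its center.

    Image y points down, so positive angles turn clockwise on screen.
    The canvas size is unchanged, so the result is clipped to the image bounds.
    """
    w, h = img_size
    cx, cy = w / 2, h / 2
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    xmin, ymin, xmax, ymax = box
    xs, ys = [], []
    for x, y in [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]:
        dx, dy = x - cx, y - cy
        xs.append(cx + dx * cos_t - dy * sin_t)
        ys.append(cy + dx * sin_t + dy * cos_t)
    return (max(0.0, min(xs)), max(0.0, min(ys)),
            min(float(w), max(xs)), min(float(h), max(ys)))


box = resize_box((200, 120, 360, 280), src_size=(640, 360))
print(box)
print(rotate_box(box, 5, (416, 416)))
```

A rotated rectangle no longer lines up with the image axes, so the new label is the smallest axis-aligned box around the rotated corners and is slightly looser than the original. Small rotations such as ±5° keep that extra slack modest.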

He then used the Roboflow YOLOv5 Google Colab notebook as a base and trained his model for 50 epochs on a T4 GPU. He ran inference on a video recorded from the Vector's camera. Full results are available in his modified Colab notebook.
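Roboflow's notebook drives the Ultralytics YOLOv5 repository's `train.py` and `detect.py` scripts. The Python sketch below builds a YOLOv5-style dataset config and the two command lines. The directory layout, the class name `robot`, the `yolov5s.pt` starting weights, the batch size, the confidence threshold, and the weights and video paths are all assumptions, and the default output path for trained weights has changed between YOLOv5 versions:

```python
def data_yaml_text(dataset_dir, class_names):
    """Return a YOLOv5-style dataset config: image folders, class count, class names."""
    lines = [
        f"train: {dataset_dir}/train/images",
        f"val: {dataset_dir}/valid/images",
        f"test: {dataset_dir}/test/images",
        f"nc: {len(class_names)}",
        f"names: {list(class_names)!r}",
    ]
    return "\n".join(lines) + "\n"


def train_command(data_yaml, epochs=50, img_size=416, batch_size=16, weights="yolov5s.pt"):
    """Argument list for YOLOv5's train.py, run from the repository root."""
    return ["python", "train.py",
            "--img", str(img_size),
            "--batch-size", str(batch_size),
            "--epochs", str(epochs),
            "--data", str(data_yaml),
            "--weights", weights]


def detect_command(weights, video_path, img_size=416, conf_thres=0.4):
    """Argument list for YOLOv5's detect.py on a recorded video."""
    return ["python", "detect.py",
            "--weights", str(weights),
            "--img", str(img_size),
            "--conf-thres", str(conf_thres),
            "--source", str(video_path)]


if __name__ == "__main__":
    # Hypothetical paths; save the YAML text as data.yaml before training.
    print(data_yaml_text("robots", ["robot"]))
    print(" ".join(train_command("data.yaml")))
    print(" ".join(detect_command("runs/train/exp/weights/best.pt", "vector_clip.mp4")))
```

To actually run a command, pass its list to `subprocess.run(cmd, cwd="yolov5", check=True)` from a clone of the YOLOv5 repository.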

Results

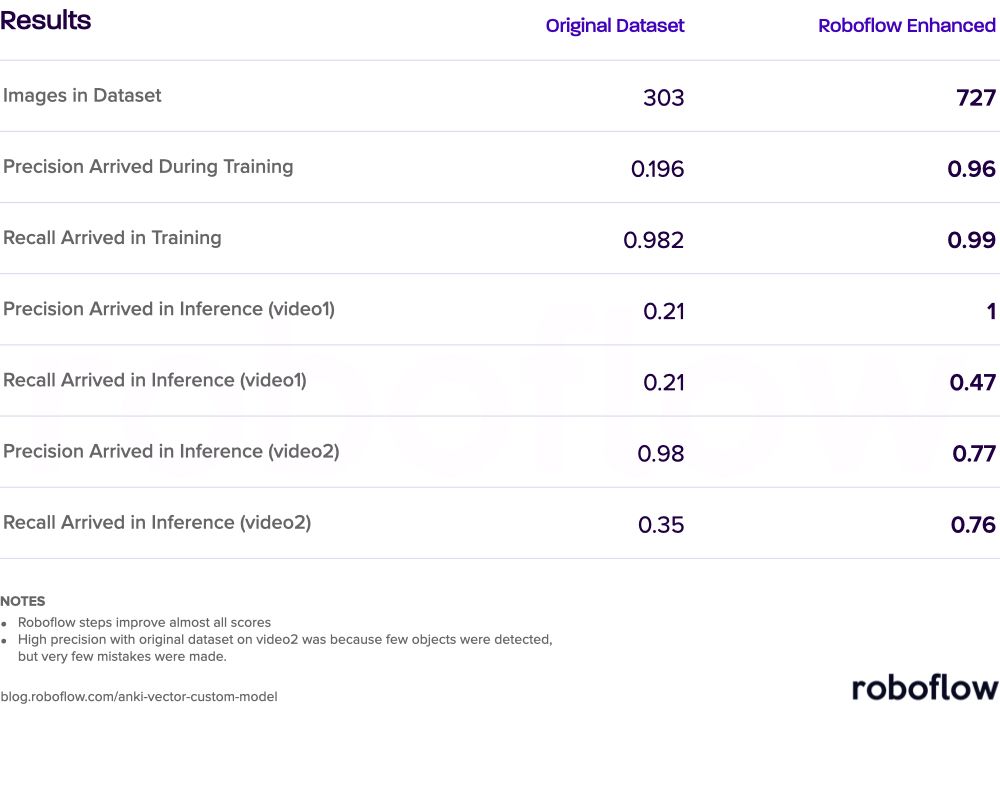

The training results were quite good, and Roboflow's augmentations improved almost every validation metric.
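The post doesn't list which metrics improved. YOLOv5 typically reports precision, recall, and mAP on the validation set, and all of them rest on matching predicted boxes to labeled boxes by intersection over union (IoU). Here is a minimal Python sketch of that matching at a single IoU threshold of 0.5, using made-up boxes:

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, truths, iou_thresh=0.5):
    """Greedy matching: predictions, highest confidence first, claim the best unmatched truth box.

    `preds` is a list of (confidence, box); `truths` is a list of boxes.
    """
    matched = set()
    tp = 0
    for _conf, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, None
        for j, t in enumerate(truths):
            if j in matched:
                continue
            v = iou(box, t)
            if v > best_iou:
                best_iou, best_j = v, j
        if best_j is not None and best_iou >= iou_thresh:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(truths) if truths else 0.0
    return precision, recall


truths = [(10, 10, 50, 50), (100, 100, 150, 150)]             # hypothetical labels
preds = [(0.9, (12, 12, 50, 52)), (0.8, (200, 200, 240, 240))]  # hypothetical detections
print(precision_recall(preds, truths))  # (0.5, 0.5)
```

mAP extends this by sweeping the confidence threshold to trace a precision-recall curve and averaging the area under it, either at IoU 0.5 (mAP@0.5) or across thresholds from 0.5 to 0.95 (mAP@0.5:0.95).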

Amitabha ran his trained model against two 30-second video clips: one with a background similar to the training data and one with a very different background.

Try it Yourself

Thanks to Amitabha's hard work and generosity, you don't even need an Anki Vector to try training a model to detect robots! He has shared his labeled robot object detection dataset via Roboflow Public Datasets under a Creative Commons license.

Next Steps

The company that acquired the IP for the Vector has promised to release its firmware as open source in the near future. Amitabha hopes to be able to deploy his trained model to run natively on the robot's hardware.

He also wants to try other preprocessing and augmentation steps in Roboflow to see if he can improve his model's generalizability (particularly in detecting the robot reliably amidst new settings and backgrounds).

Finally, he hopes others will take up the dataset he's shared and try other models and ideas to improve performance.

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Sep 22, 2020). Training Robots to Identify Other Robots. Roboflow Blog: https://blog.roboflow.com/anki-vector-custom-model/